Google Algorithm Update History

Learn how Google’s algorithm has developed over time, what drove changes, and what it means for search and your own web content.

Intro

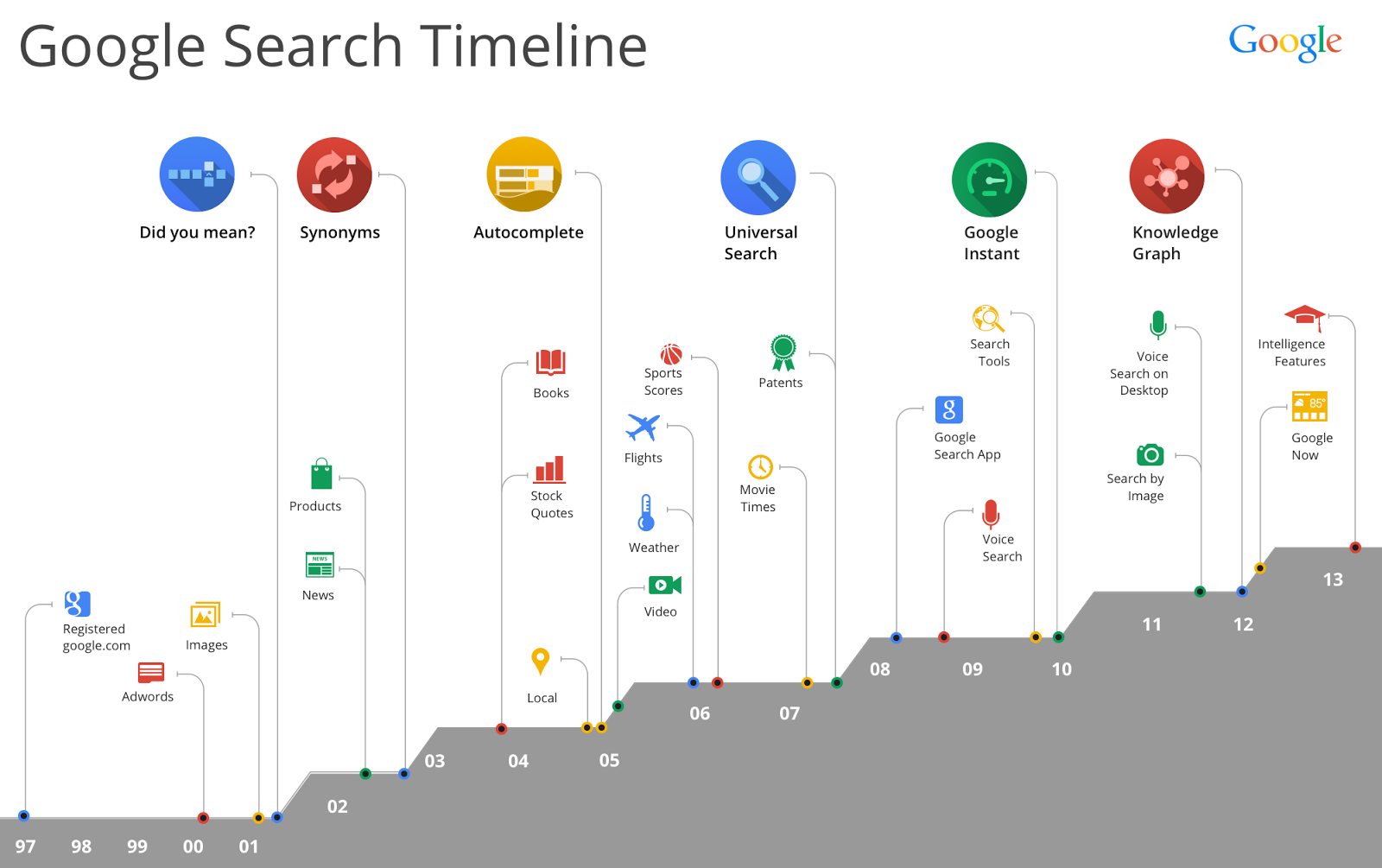

The Google algorithm is constantly changing. In 2018 alone, Google ran 15,096 live traffic experiments and launched 3,234 updates to its search algorithm.

Not all updates have a significant impact on search results. This page covers the top 150 updates to how search results function from 2000-2019. Updates are a blend of changes to:

- Algorithms

- Indexation

- Data (aka Data Refreshes)

- Google Search UIs

- Webmaster Tools

- Ranking factors and signals

Before we get into the timeline of individual Google updates, it’s helpful to define a handful of terms upfront for any SEO newbies out there:

Google’s Core Algorithm

SEO experts, writers, and audiences will often refer to “Google’s Core Algorithm” as though it is a single item. In reality, Google’s Core Algorithm is made up of millions of smaller algorithms that all work together to surface the best possible search results to users. What we mean when we say “Google’s Core Algorithm” is the set of algorithms that are applied to every single search, which are no longer considered experimental, and which are stable enough to run consistently without requiring significant changes.

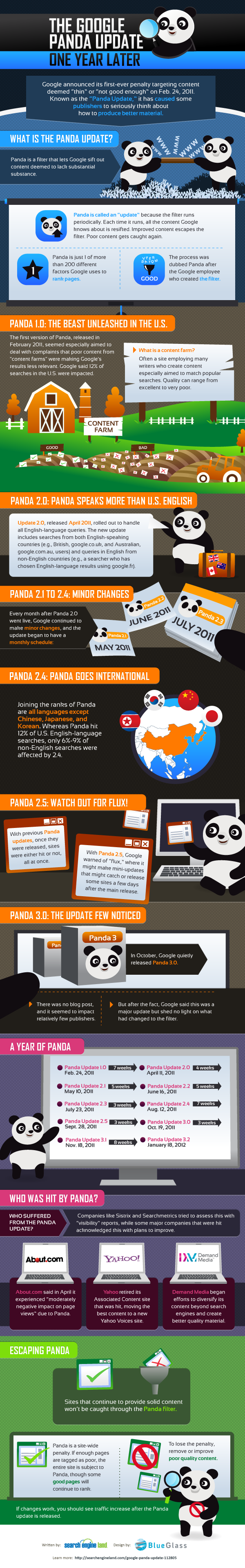

Google Panda (2011-2016)

The Panda algorithm focused on removing low-quality content from search by evaluating the on-page content itself. It targeted thin content, content dominated by ads, and poor-quality content (e.g., spelling and grammar mistakes), while rewarding unique content. Google Panda was updated 29 times before finally being incorporated into the core algorithm in January 2016.

Google Penguin (2012-2016)

The Penguin algorithm focused on removing sites engaging in spammy tactics from the search results. Penguin primarily filtered sites engaging in keyword stuffing and link schemes out of the search results. Google Penguin was updated 10 times before being integrated into Google’s core algorithm in September of 2016.

RankBrain (2015-Present)

This machine-learning-based AI system helps Google process and understand the meaning behind new search queries. RankBrain works by inferring the meaning of new words or terms from context and related terms. RankBrain began rolling out across all of Google search in early 2015 and was fully live and global by mid-2016. Within three months of full deployment, RankBrain was already the third most important signal contributing to the results selected for a search query.

Matt Cutts

One of the first 100 employees at Google, Matt Cutts was the head of Google’s Web Spam team for many years and interacted heavily with the webmaster community. He spent a lot of time answering questions about algorithm changes and providing webmasters with high-level advice and direction.

Danny Sullivan

Originally a Founding Editor, Advisor, and Writer for Search Engine Land (among others), Danny Sullivan now communicates with the SEO community as Google’s Public Search Liaison. Mr. Sullivan frequently finds himself reminding the community that the best way to rank is to create quality content that provides value to users.

Gary Illyes

A Google Webmaster Trends Analyst who often responds to the SEO community’s questions about Google algorithm updates and changes. Gary is known for his candid (and entertaining) responses, which usually carry a heavy dose of sarcasm.

Webmaster World

Frequently referenced whenever people discuss Google algorithm updates, webmasterworld.com is one of the most popular forums for webmasters to discuss changes to Google’s search results. A popular community since the early 2000s, it still draws webmasters whenever major fluctuations are noticed, as they flock there to trade theories.

2021 Google Search Updates

2021 December – Local Search Update

From November 30th – December 8th, Google runs a local search ranking update. This update rebalances the various factors used to generate local results. Primary ranking factors for local search remain the same: Relevance, Distance, and Prominence.

Additional Reading:

2021 November – Core Quality Update

From November 17th – November 30th, Google rolls out another core update. As with all core updates, this one is focused on improving the quality and relevance of search results.

Additional Reading:

2021 August – Title Tag Update

Starting August 16th, Google begins rewriting page titles in the SERPs. After many SEOs report negative results from the update, Google rolls back some of the changes in September. Google emphasizes that it still uses the content of the <title> tag over 80% of the time.

Additional Reading:

2021 July – Link Spam Update

Google updates its link-spam-fighting algorithm to more effectively identify and nullify link spam. The update is particularly focused on affiliate sites and other websites that monetize through links.

Additional Reading:

- A Reminder on Qualifying Links and Our Link Spam Update

- How Affiliates Can Work With Google to Reduce Link Spam

2021 June – Page Experience Update

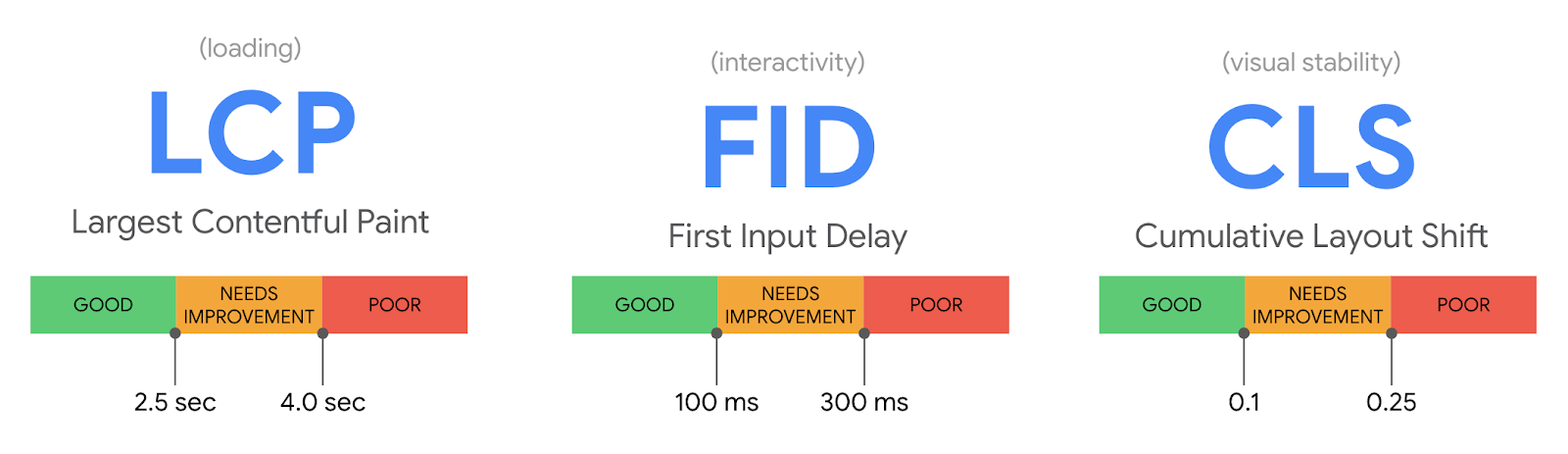

Google announced in late 2020 that its upcoming 2021 Page Experience update would introduce Core Web Vitals as new Google ranking factors. Core Web Vitals are a set of user experience metrics covering loading performance, interactivity, and visual stability. Google evaluates them through the following criteria:

- Largest Contentful Paint (LCP) – The time it takes for the largest content element visible in the viewport to render

- First Input Delay (FID) – Measures interactivity: the delay between a user’s first interaction with the page (such as a click or tap) and the moment the browser can begin responding to it

- Cumulative Layout Shift (CLS) – Measures visual stability: how much the page layout shifts unexpectedly as it loads and while users interact with it

With this update, Google evaluates page experience signals such as mobile-friendliness, safe browsing, HTTPS security, and adherence to intrusive interstitial guidelines, alongside Core Web Vitals, when ranking web pages.
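To make the three metrics concrete, here is a minimal Python sketch that rates measurements against the "good" and "needs improvement" thresholds Google published on web.dev (LCP 2.5 s / 4 s, FID 100 ms / 300 ms, CLS 0.1 / 0.25). The function names are hypothetical, and a real assessment uses the 75th percentile of field data rather than a single measurement.

```python
# Upper bounds for "good" and "needs improvement" as published on web.dev;
# anything above the second bound is rated "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify one Core Web Vitals measurement (hypothetical helper)."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(measurements: dict) -> bool:
    """True only if every supplied metric is rated 'good'."""
    return all(rate(name, value) == "good" for name, value in measurements.items())


print(rate("LCP", 3.1))  # needs improvement
```

Note that the check only covers the metrics you pass in; a page missing a measurement is not flagged by this sketch.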

Additional Reading:

- The Page Experience Update: New SEO Ranking Factors for 2021

- Evaluating Page Experience for a Better Web

2021 February – Passage Ranking

Google introduces Passage Ranking and starts ranking individual passages of web content. Google now homes in on specific passages of long-form content and ranks those passages in the SERPs. Google highlights the relevant passage and takes users directly to it after they click the blue link result.

Additional Reading:

2020 Google Search Updates

2020 October – Indexing Bugs

From early September to the beginning of October, Google experienced multiple bugs affecting mobile indexing, canonicalization, news indexing, the Top Stories carousel, and sports scores. The bugs impacted about 0.02% of searches. Google fully resolved all impacted URLs by October 9th.

Additional Reading:

2020 August 11 – Google Glitch

On Tuesday, August 11th, Google experienced a massive, worldwide indexing glitch that impacted search results. Search results were very low-quality or irrelevant to search queries, and ecommerce sites in particular reported significant impacts on rankings. Google resolved the glitch within a few days.

Additional Reading:

2020 June – Google Bug Fix

A Google representative confirmed that an indexing bug had temporarily impacted rankings: Google was struggling to surface fresh content.

Additional Reading:

2020 May – Core Quality Update

The May 2020 core update was one of the more significant broad core updates, arriving alongside the introduction of Core Web Vitals and an increased emphasis on E-A-T. The update continued an effort to improve the quality of SERP results for COVID-related searches. It most significantly impacted sites with low-quality or unnatural links. However, some sites with lower domain authority did appear to see ranking improvements for pages with high-quality, relevant content.

Later today, we are releasing a broad core algorithm update, as we do several times per year. It is called the May 2020 Core Update. Our guidance about such updates remains as we’ve covered before. Please see this blog post for more about that: https://t.co/e5ZQUAlt0G

— Google SearchLiaison (@searchliaison) May 4, 2020

Many SEOs reacted negatively, particularly because of the timing of the update, which occurred at the height of economic shutdowns to slow the spread of coronavirus. Concerns about the May 2020 core quality update ranged from social media domination of the SERPs to better rankings for larger, more dominant brands like Amazon and Etsy. Some analyses noted these changes may have reflected user intent during quarantine, particularly because the update focused on providing better results for queries with multiple search intents. Google responded to the complaints by reinforcing existing content-quality signals.

Additional Reading:

- An Intensive Analysis of the May 2020 Google Update by MHC

- Google May 2020 Algorithm Update: 4 Key Changes and how to Adjust

2020 March – COVID-19 Pandemic

Although not an official update, the coronavirus outbreak led to an unprecedented level of search queries that temporarily changed the landscape of search results. Google made several changes to adjust to the trending searches such as:

- Increased user personalization to combat misinformation

- Removed COVID-19 misinformation across YouTube and other platforms

- Added “Sticky Menu” for COVID related searches

- Added temporary business closures to the Map Pack

- Temporarily banned ads for respirators and medical masks

- Created COVID-19 Community Mobility Reports

- Temporarily limited certain Google My Business listing features

Additional Reading:

2020 February 7 – Unannounced Update

In February of 2020, many SEOs reported seeing significant changes to rankings, although Google had not announced a broad core update and denied that one had taken place. Various analyses of the update showed no clear pattern among the websites that were impacted.

Additional Reading:

- Google Update February 2020: Background and Analysis

- Google responds to February 2020 update saying: “We do Updates all the Time”

2020 January 22 – Featured Snippet De-duplication

Prior to this January 2020 update, a site that earned the featured snippet, or “position zero,” also appeared again as one of the organic results below it. This update de-duplicated search results to eliminate this double exposure. It impacted 100% of searches worldwide and had significant effects on rank tracking and organic CTR.
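Conceptually, the de-duplication amounts to dropping the featured page's URL from the organic list, which also shifts every result below it up one position in a rank tracker. The sketch below illustrates that effect under simplifying assumptions (an exact URL match and a hypothetical `Result` type); it is not how Google implements the change.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    url: str
    title: str


def deduplicate(featured: Optional[Result], organic: list[Result]) -> list[Result]:
    """Drop any organic listing whose URL matches the featured snippet's URL."""
    if featured is None:
        return organic
    return [r for r in organic if r.url != featured.url]


def positions(results: list[Result]) -> dict[str, int]:
    """Map each URL to its 1-based organic position, as a rank tracker might record it."""
    return {r.url: pos for pos, r in enumerate(results, start=1)}


featured = Result("https://a.example/guide", "Guide")
organic = [featured, Result("https://b.example/", "B")]
print(positions(organic))                          # b.example at position 2
print(positions(deduplicate(featured, organic)))   # b.example now at position 1
```

How a given rank tracker numbers the featured snippet itself varies, which is one reason reported rankings shifted after this update.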

Additional Reading:

2020 January – Broad Core Update

On January 13th, 2020, Google started rolling out another broad core update. Google did not provide details about the update, but did emphasize existing webmaster guidelines about content quality.

Additional Reading:

2019 Google Search Updates

2019 November – Local Search Update

In November 2019, Google rolled out an update to how local search results (e.g., map pack results) are generated. This update improved Google’s understanding of the context of a search by improving its understanding of synonyms. In essence, local businesses may find they are showing up in more searches.

In early November, we began making use of neural matching as part of the process of generating local search results. Neural matching allows us to better understand how words are related to concepts, as explained more here: https://t.co/ShQm7g9CvN

— Google SearchLiaison (@searchliaison) December 2, 2019

2019 October 26 – BERT

In October, Google introduced BERT, a deep-learning algorithm focused on helping Google understand the intent behind search queries. BERT (Bidirectional Encoder Representations from Transformers) gives context to each word within a search query. The “bidirectional” in BERT refers to how the algorithm looks at the words that come both before and after each term when assessing the meaning of the term itself.

Here’s an example of bidirectional context from Google’s blog:

In the sentence “I accessed the bank account,” a unidirectional contextual model would represent “bank” based on “I accessed the” but not “account.” However, BERT represents “bank” using both its previous and next context — “I accessed the… account” — starting from the very bottom of a deep neural network, making it deeply bidirectional.

The introduction of BERT marked the most significant change to Google search in half a decade, impacting 1 in 10 searches (about 10% of English-language search queries in the U.S.).
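In the quoted “bank account” example, the difference comes down to which neighboring words are visible when the model represents “bank.” This toy Python sketch only illustrates those two context windows; it does not implement BERT, which learns word representations with a Transformer encoder, and the `context` helper is hypothetical.

```python
def context(tokens: list, i: int, bidirectional: bool) -> list:
    """Return the words a model may look at when representing tokens[i]."""
    left = tokens[:i]
    right = tokens[i + 1:] if bidirectional else []
    return left + right


sentence = "I accessed the bank account".split()
idx = sentence.index("bank")
print(context(sentence, idx, bidirectional=False))  # ['I', 'accessed', 'the']
print(context(sentence, idx, bidirectional=True))   # ['I', 'accessed', 'the', 'account']
```

Only the bidirectional window includes “account,” the word that tells you which sense of “bank” is meant.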

Additional Reading:

2019 September – Entity Ratings & Rich Results

If you place reviews on your own site (even through a third-party widget) and use schema markup on those reviews, the review stars will no longer show up in Google’s results. Google applied this change to entities considered to be Local Businesses or Organizations.

The reasoning? Google considers these types of reviews to be self-serving. The logic is that if a site places a third-party review widget on its own domain, it probably has some control over the reviews or the review process.

Our recommendation? If you’re a local business or organization, claim your Google My Business listing and focus on encouraging users to leave reviews with Google directly.

Additional Reading:

2019 September – Broad Core Update

This update included two components:

First, it hit sites exploiting a 301 redirect trick involving expired sites. In this trick, users would either buy expired sites with good SEO metrics and redirect the entire domain to their own site, or pay a third party to redirect a portion of pages from an expired site to their domain.

Note: Sites with relevant 301 redirects from expired sites were still fine.

Second, video content appears to have gotten a boost from this update. June’s update brought an increase in video carousels in the SERPs, and now in September we’re seeing video content outrank organic pages that previously ranked above it.

Additional Reading:

2019 June – Broad Core Update

This was the first time Google had pre-announced a broad core update. Danny Sullivan, Google’s Search Liaison, stated that they chose to pre-announce the changes so webmasters would not be left “scratching their heads” about what was happening this time.

What happened?

- We saw an increase in video carousels in the SERPs

- Low quality news sites saw losses

What can sites do to respond to this broad core update? It looks like Google is leaning into video content, at least in the short-term. Consider including video as one of the types of content your team creates.

Additional Reading:

2019 May 22-26 – Indexing Bugs

On Wednesday, May 22nd, Google tweeted that indexation bugs were causing stale results to be served for certain queries. This bug was resolved early on Thursday, May 23rd.

By the evening of Thursday, May 23rd, Google was tweeting again, stating that it was working on a new indexing bug that was preventing new pages from being captured. On May 26th, Google followed up that this indexation bug had also been fixed.

Additional Reading:

2019 April 4-11 – De-Indexing Bugs

In April 2019, an indexing bug caused about 4% of stable URLs to fall off the first page. What happened? A technical error de-indexed a massive set of webpages.

Additional Reading:

2019 March 12 – Broad Core Update

Google was notably vague about this update and kept redirecting people and questions to the Google quality guidelines. However, the webmaster community noticed that the update seemed to have a heavier impact on YMYL (your money or your life) pages.

YMYL sites with low-quality content took a nosedive, and sites with strong trust signals (well-known brands, known authorities on multiple topics, etc.) climbed the rankings.

Let’s take two examples:

First, Everydayhealth.com lost 50% of its SEO visibility from this update. Sample headline: Can Himalayan Salt Lamps Really Help People with Asthma?

Next, Medicinenet.com saw a 12% increase in its SEO visibility from this update. Sample headline: 4 Deaths, 141 Legionnaires’ Infections Linked to Hot Tubs.

This update also seemed to factor in user behavior more strongly. Domains where users spent longer on the site, had more pages per visit, and had lower bounce rates saw an uptick in their rankings.

Additional Reading:

2019 March 1 – Extended Results Page

For one day, on March 1st, Google displayed 19 results on the first page of SERPs for all queries (20 if you count the featured snippet). Many hypothesize it was a glitch related to in-depth articles, a result type from 2013 that has long since been integrated into regular organic search results.

Additional Reading:

2018 Google Algorithm Updates

2018 August – Broad Core Update (Medic)

This broad core update, known by its nickname “Medic,” impacted YMYL (your money or your life) sites across the web.

SEOs had many theories about what to do to improve rankings after this update, but both Google and the larger SEO community ended up at the same messaging: make content users are looking for, and make it helpful.

This update sparked a lot of discussion around E-A-T (Expertise, Authoritativeness, Trustworthiness) for page quality, and the importance of clear authorship and bylines on content.

Additional Reading:

2018 July – Chrome Security Warning

Google begins marking all http sites as “not secure” and displaying warnings to users.

Looking forward, Google is planning on blocking mixed content from https sites.

What can you do? Purchase an SSL certificate and make the move from http to https as soon as possible. Double check that all of your subdomains, images, PDFs and other assets associated with your site are also being served securely.
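A rough way to start that audit is to scan your pages’ HTML for asset URLs that still begin with http://. The sketch below uses Python’s standard-library `html.parser`; the tag and attribute list is an assumption and is not exhaustive (it ignores CSS `url()` references, `srcset`, and resources injected by JavaScript).

```python
from html.parser import HTMLParser

# Tags and the attribute that carries their URL (a partial, assumed list).
# Plain <a> links (e.g., to PDFs) are not mixed content, but are worth moving to https too.
ASSET_ATTRS = {
    "img": "src",
    "script": "src",
    "link": "href",
    "iframe": "src",
    "source": "src",
    "video": "src",
    "audio": "src",
    "a": "href",
}


class InsecureAssetFinder(HTMLParser):
    """Collect URLs in the listed tags that are still requested over plain http."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list = []

    def handle_starttag(self, tag, attrs):
        wanted = ASSET_ATTRS.get(tag)
        for name, value in attrs:
            # Attributes without a value arrive as None, so guard before calling lower().
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_insecure_assets(html: str) -> list:
    finder = InsecureAssetFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


page = '<img src="http://example.com/a.png"><a href="http://example.com/c.pdf">PDF</a>'
print(find_insecure_assets(page))
```

Self-closing tags such as `<img ... />` are also caught, because `HTMLParser` routes them through `handle_starttag` by default.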

Additional Reading:

2018 July – Mobile Speed Update

Google rolled out the mobile page speed update, making page speed a ranking factor for mobile results.

Additional Reading:

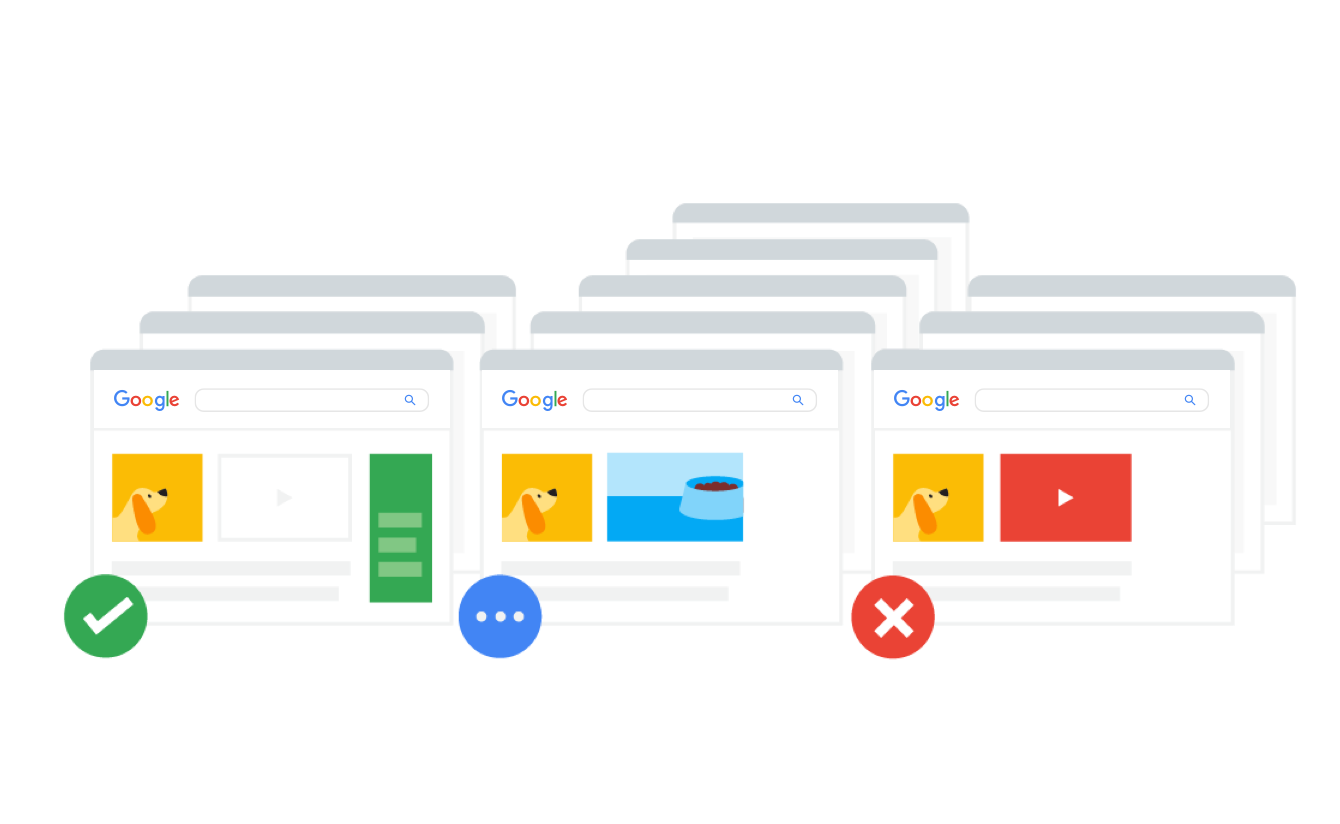

2018 June – Video Carousels

Google introduces a dedicated video carousel on the first page of results for some queries, and moves videos out of regular results. This change also led to a significant increase in the number of search results displaying videos (+60%).

Additional Reading:

2018 April – Broad Core Update

The official line from Google about this broad core update is that it rewards quality content that was previously under-rewarded. Sites whose content was clearly better than that of their organic competitors saw a boost, while sites with thin or duplicative content fell.

2018 March – Broad Core Update

March’s update focused on content relevance (how well does content match the intent of the searcher) rather than content quality.

What can you do? Take a look at the pages Google is listing in the top 10-20 spots for your target search term and see if you can spot any similarities that hint at how Google views the intent of the search.

Additional Reading:

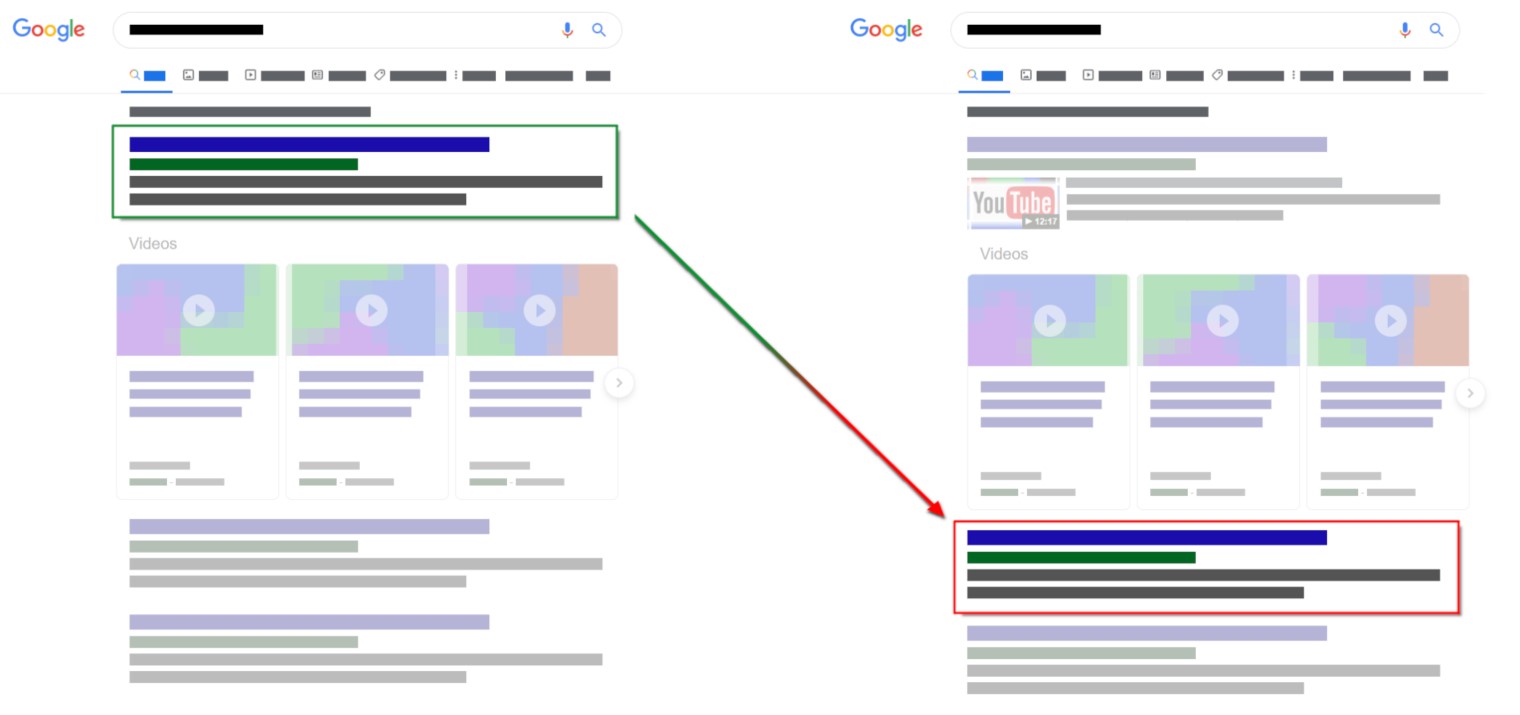

2018 March – Mobile-First Index Starts to Roll Out

After months of testing, Google begins rolling out mobile-first indexing. Under this approach, Google crawls and indexes the mobile version of website pages when adding them to its index. If content is missing from the mobile versions of your webpages, that content may not be indexed by Google.

To quote Google themselves,

“Mobile-first indexing means that we’ll use the mobile version of the page for indexing and ranking, to better help our – primarily mobile – users find what they’re looking for.”

Essentially, the entire index is going mobile-first. This process of migrating to indexing the mobile version of websites is still underway. Websites are being notified in Search Console when they’ve been migrated to Google’s mobile-first index.
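One hedged way to spot-check for missing mobile content is to compare the text of the desktop and mobile HTML for the same URL. The sketch assumes you have already fetched both versions (for example, with desktop and smartphone user agents) and simply diffs text chunks using Python’s standard-library `html.parser`; it ignores images, structured data, and JavaScript-rendered content, all of which also matter for mobile-first indexing.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect whitespace-normalized text chunks, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        text = " ".join(data.split())
        if text and not self._skip:
            self.chunks.append(text)


def text_chunks(html: str) -> set:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return set(parser.chunks)


def missing_on_mobile(desktop_html: str, mobile_html: str) -> set:
    """Text chunks present on the desktop page but absent from the mobile page."""
    return text_chunks(desktop_html) - text_chunks(mobile_html)


desktop = "<h1>Shoes</h1><p>Free returns</p><p>Size guide</p>"
mobile = "<h1>Shoes</h1><p>Free returns</p>"
print(missing_on_mobile(desktop, mobile))  # {'Size guide'}
```

Exact-match chunks are a blunt instrument: reworded or re-wrapped text will show up as "missing," so treat the output as a list of things to review by hand.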

2017 Google Search Updates

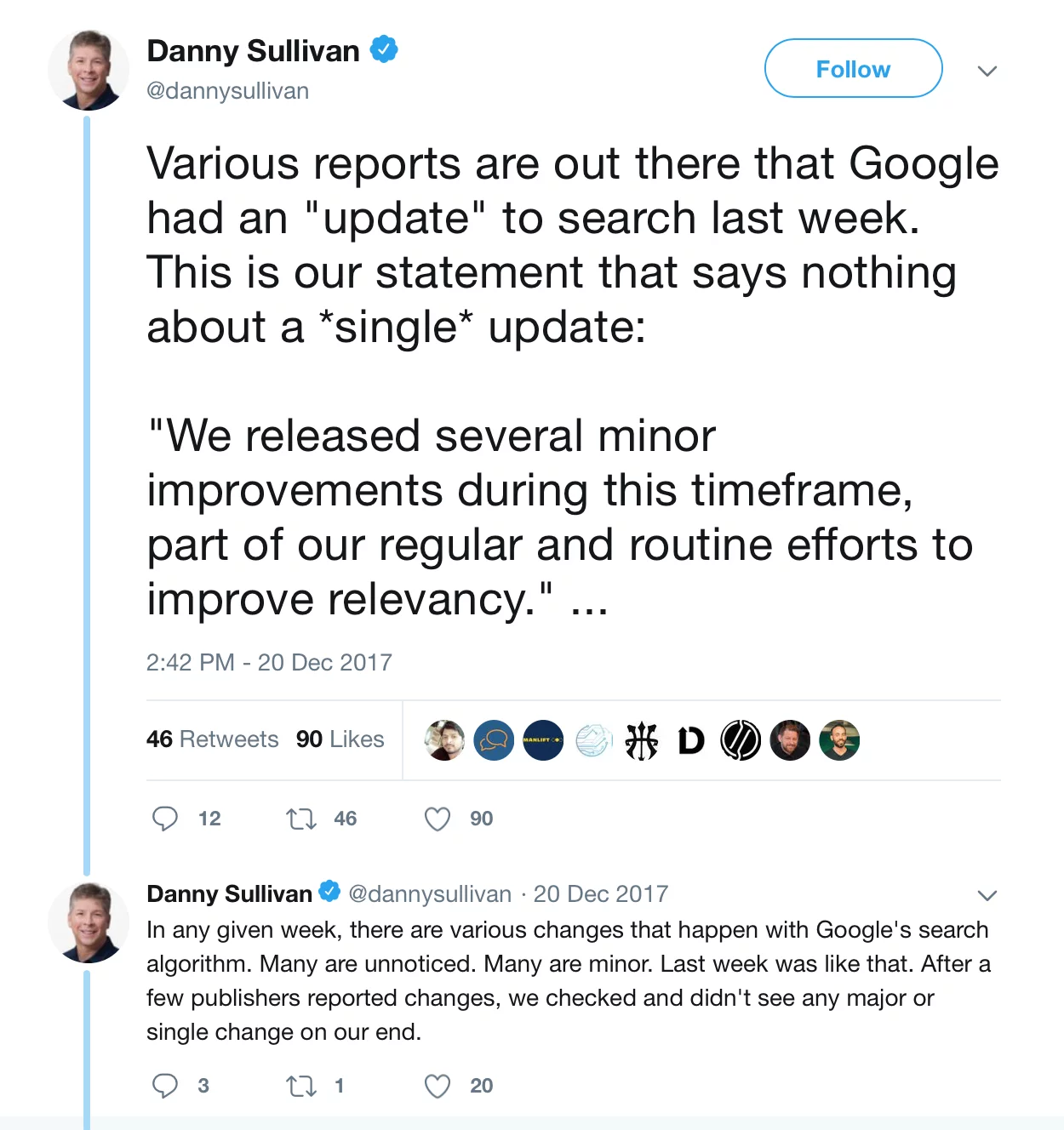

2017 December – Maccabees

Google states that a series of minor improvements are rolled out across December. Webmasters and SEO professionals see large fluctuations in the SERPs.

What were the Maccabees changes?

Webmasters noted that doorway pages took a hit. Doorway pages act as landing pages for users, but don’t contain the real content – users have to get past these initial landing pages to access content of any value. Google considers these pages barriers to a user.

A writer at Moz who dissected a slew of site data from mid-December noted one key observation: when two pages ranked for the same term, the one with better user engagement saw its rankings improve after this update, while the other page saw its rankings drop. In many cases, sites that began to lose traffic found blog pages being shown where product or service pages should have been displayed.

A number of official celebrity sites fall in the rankings, notably those of Channing Tatum, Charlie Sheen, Kristen Stewart, Tom Cruise, and even Barack Obama. This suggests Google may have rebalanced factors around authoritativeness vs. content quality. One SEO expert noted that thin celebrity sites fell while more robust celebrity sites (like Katy Perry’s) maintained their #1 positions.

Multiple webmasters reported a slew of manual actions on December 25th and 26th, and some webmasters also reported seeing jumps on the 26th for sites that had been working on site quality.

Additional Reading:

2017 November – Snippet Length Increased

Google increases the character length of meta descriptions to 300 characters. This update was not long-lived as Google rolled back to the original 150-160 character meta descriptions on May 13, 2018.

2017 May – Quality Update

Webmasters noted that this update targeted sites and pages with:

- Deceptive advertising

- UX challenges

- Thin or low quality content

Additional Reading:

2017 March – Fred

In early March, webmasters and SEOs began to notice significant fluctuations in the SERPs, and Barry Schwartz of Search Engine Roundtable began tweeting at Google asking it to confirm algorithm changes.

The changes seemed to target content sites engaging in aggressive monetization at the expense of users: basically, sites filling the internet with low-value content meant to benefit everyone except the user. This included PBN sites and sites created with the sole intent of generating AdSense income.

Fred got its name from Gary Illyes, who, when an SEO expert asked whether he wanted to name the update, suggested that we start calling all unnamed updates “Fred.”

Fred it is https://t.co/aEE7sfm7X5 @i_praveensharma @JohnMu

— Barry Schwartz (@rustybrick) March 9, 2017

The joke, for anyone who knows the Webmaster Trends Analyst, is that he calls everything unnamed “Fred” (fish, people, EVERYTHING).

What those who aren’t friends with @methode don’t realize is that he calls EVERYTHING un-named, fred. Fish, people, whatever.

— Ryan Jones (@RyanJones) March 9, 2017

The SEO community took this as confirmation of recent algorithm changes (note: literally every day has algorithm updates), which validated their digging into the SERP changes.

Additional Reading:

2017 January 10 – Pop Up Penalty

Google announces that intrusive pop ups and interstitials are going to be factored into their search algorithm moving forward.

“To improve the mobile search experience, after January 10, 2017, pages where content is not easily accessible to a user on the transition from the mobile search results may not rank as highly.”

This change caused rankings to drop for sites that forced users to get past an ad or pop up to access relevant content. Not all pop ups or interstitials were penalized, for instance the following pop ups were still okay:

- Pop ups that helped sites stay legally compliant (ex: accepting cookies, or verifying a user’s age).

- Pop ups that did not block content on load.

Additional Reading:

2016 Google Search Updates

2016 September – Penguin 4.0

The Google announcement of Penguin 4.0 had two major components:

- Penguin had been merged into the core algorithm, and would now have real-time updates.

- Penguin would be more page-specific moving forward rather than impacting entire domains.

SEOs also noted one additional change. Penguin 4.0 seemed to simply discount spam links rather than penalize sites with spammy links. This appeared to be an attempt by Google to mitigate the impact of negative SEO attacks on sites.

That being said, today in 2019 we still see a positive impact from running disavows for clients who have seen spammy links creep into their backlink profiles.
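For readers who haven’t run one, a disavow is uploaded to Google as a plain-text file listing one URL or `domain:` entry per line, with `#` marking comment lines. The hypothetical helper below only assembles such a file from lists you have already audited. Deciding which links to disavow is the hard part, and this sketch does not show it.

```python
def build_disavow_file(domains: list, urls: list) -> str:
    """Assemble disavow-file text: a '#' comment, 'domain:' entries, then single URLs."""
    lines = ["# Links we could not get removed manually"]
    # Normalize before de-duplicating so "Spam.example" and "spam.example" collapse.
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted({u.strip() for u in urls})
    return "\n".join(lines) + "\n"


print(build_disavow_file(["spam.example", " Spam.example "],
                         ["http://other.example/bad-page.html"]))
```

Using `domain:` entries for whole spammy domains keeps the file short and catches new pages on those domains without further edits.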

Additional Reading:

2016 September – Possum Update

This update targeted duplicate and spammy results in local search (the Local Pack and Google Maps). The goal was to provide more diverse results when users search for a local business, product, or service.

Prior to the Possum update Google was filtering out duplicates in local results by looking for listings with matching domains or matching phone numbers. After the Possum update Google began filtering out duplicates based on their physical address.

Businesses that saw some of their listings removed from the local pack may have initially thought those listings were dead (killed by this update), but they weren’t; they were just being filtered (playing possum). The term was coined by Phil Rozek.

SEOs also noted that businesses right outside of city limits also saw a major uptick in local rankings, as they got included in local searches for those cities.
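The before-and-after filtering described above can be illustrated with two toy de-duplication keys. This is a simplified sketch, not Google’s local algorithm, whose details are not public. The `Listing` type and the address normalization are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    name: str
    domain: str
    phone: str
    address: str


def normalize_address(address: str) -> str:
    """Rough, hypothetical normalization: lowercase, strip punctuation, collapse spaces."""
    kept = "".join(ch for ch in address.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def filter_by_domain_or_phone(listings: list) -> list:
    """Pre-Possum style: hide a listing if its domain or phone was already shown."""
    seen_domains, seen_phones, kept = set(), set(), []
    for listing in listings:
        if listing.domain in seen_domains or listing.phone in seen_phones:
            continue
        seen_domains.add(listing.domain)
        seen_phones.add(listing.phone)
        kept.append(listing)
    return kept


def filter_by_address(listings: list) -> list:
    """Post-Possum style: hide a listing if its physical address was already shown."""
    seen, kept = set(), []
    for listing in listings:
        key = normalize_address(listing.address)
        if key in seen:
            continue
        seen.add(key)
        kept.append(listing)
    return kept


a = Listing("Smith Law", "smithlaw.example", "555-0100", "100 Main St.")
b = Listing("Jones Law", "joneslaw.example", "555-0199", "100 Main St")
print(len(filter_by_domain_or_phone([a, b])))  # 2: different domains and phones
print(len(filter_by_address([a, b])))          # 1: same building, second listing filtered
```

The second listing is not deleted, only hidden for that query, which is exactly the “playing possum” behavior businesses noticed.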

Additional Reading:

2016 May – Mobile Friendly Boost

Google boosts the effect of the mobile-friendly ranking signal in search.

Google took time to stress that sites which are not mobile friendly but which still provide high quality content will still rank.

Additional Reading:

2016 February 19 – Adwords Change

Google removes sidebar ads and adds a fourth ad to the top block above the organic search results.

This move reflects the search engine giant continuing to prioritize mobile-first experiences, where side-bar ads are cumbersome compared to results in the main content block.

2016 January – Broad Core Update + Panda Is Now Core

Google confirms a core algorithm update in January, right after confirming that Panda is now part of Google’s core algorithm.

Few conclusions could be drawn about the update, but SEOs noticed significant fluctuations for news sites and publishers. Long-form content with multimedia got a boost, and older articles took a bit of a dive for branded terms. This shift could reflect Google tweaking results for current events to show more recent content, but the data was not definitive.

Additional Reading:

2015 Google Search Updates

2015 December – SSL/HTTPS by Default

Google starts indexing the https version of pages by default.

Pages using SSL are also seeing a slight boost. Google holds security as a core component of surfacing search results to users, and this shift becomes one of many security-related search algo changes. In fact, by the end of 2017 over 75% of the page one organic search results were https.

2015 October 26 – RankBrain

In testing since April 2015, RankBrain was officially introduced by Google on this date. RankBrain is a machine learning algorithm that filters search results to help give users the best answer to their query. Initially, RankBrain was used for about 15 percent of queries (mainly new queries Google had never seen before), but now it is involved in almost every query entered into Google. RankBrain has been called the third most important ranking signal.

Additional Reading:

2015 October 5 – Hacked Sites Algorithm

Google introduces an algorithm specifically targeting spammy sites in the search results that were gaining search equity from hacked sites.

This change was significant: it impacted 5% of search queries. The algorithm hides sites benefiting from hacked sites in the search results.

@rustybrick yesr. That’s what we’re aiming for.

— Gary “鯨理” Illyes (@methode) October 9, 2015

The update came right after a September message from Google about cracking down on repeat spam offenders. Google’s blog post notified SEOs that sites which repeatedly received manual actions would find it harder and harder to have those manual actions reconsidered.

Additional Reading:

2015 August 6 – Google Snack Pack

Google switches from displaying seven results for local search in the map pack to only three.

Why the change? Google is switching over (step-by-step) to mobile-first search results, aka prioritizing mobile users over desktop users.

On mobile, only three local results fit on the screen before a user needs to scroll. Google seems to want users to scroll before reaching the organic results.

Other noticeable changes from this update:

- Google only displays the street (not the complete address) unless you click into a result.

- Users can now filter snack pack results by rating using a dropdown.

Additional Reading:

2015 July 18 – Panda 4.2 (Update 29)

Roll out of Panda 4.2 began on the weekend of July 18th and affected 2-3% of search queries. This was a refresh, and the first one for Panda in about 9 months.

Why does that matter? The Panda algorithm acts like a filter on search results to sort out low quality content. Panda basically gets applied to a set of data – and decides what to filter out (or down). Until the data for a site is refreshed, Panda’s ruling is static. So when a data refresh is completed, sites that have made improvements essentially get a revised ruling on how they’re filtered.

Nine months is a long time to wait for a revised ruling!

2015 May – Quality Update / Phantom II

This change is an update to the quality filters integrated into Google’s core algorithm, and alters how the algorithm processes signals for content quality. This algorithm is real-time, meaning that webmasters will not need to wait for data refreshes to see positive impact from making content improvements.

What kind of pages did we see drop in the rankings?

- Clickbait content

- Pages with disruptive ads

- Pages where videos auto-played

- How-to sites with thin or duplicative content (this ended up impacting a lot of how-to sites)

- Pages that were hard to navigate/had UI barriers

In hindsight, this update feels like a precursor to Google’s 2017 updates for content spam and intrusive pop ups.

Additional Reading:

2015 April 21 – Mobilegeddon

Google boosts mobile-friendly pages in mobile search results.

This update was termed Mobilegeddon as SEOs expected it to impact a huge number of search queries, maybe more than any other update ever had. Why? Google was already seeing more searches on mobile than on desktop in the U.S. in May 2015.

In 2018 Google takes this a step further and starts mobile-first indexing.

Additional Reading:

2014 Google Algorithm Updates

2014 December – Pigeon Goes International

Google’s local algorithm, known as Pigeon, expands to international English speaking countries (UK, Canada, Australia) on December 22, 2014.

In December Google also releases updated guidelines for local businesses representing themselves on Google.

Additional Reading:

2014 October – Pirate II

Google releases an “improved DMCA demotion signal in Search,” specifically designed to target and downrank some of the sites most notorious for piracy.

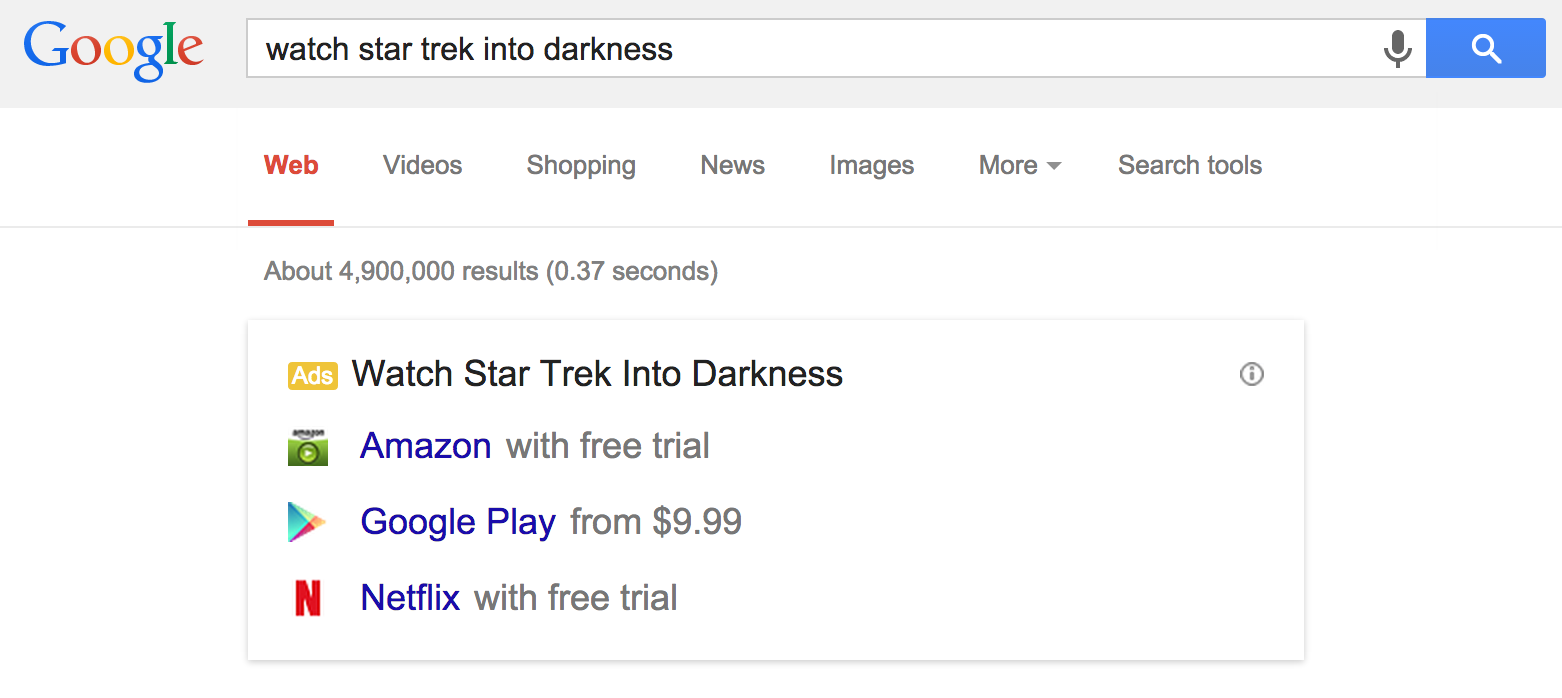

In October, Google also released an updated report on how it fights piracy, which includes changes to how results for media searches are displayed. Most of these user interface changes were geared toward helping users find legal (trusted) ways to consume the media content they were seeking.

2014 October 17 – Penguin 3.0

This update impacted 1% of English search queries, and was the first update to Penguin’s algorithm in over a year. This update was both a refresh and a major algorithm update.

2014 September – Panda 4.1 (Update 28)

Panda 4.1 is the 28th update for the algorithm that targets poor quality content. This update impacted 3-5% of search queries.

To quote Google:

“Based on user (and webmaster!) feedback, we’ve been able to discover a few more signals to help Panda identify low-quality content more precisely. This results in a greater diversity of high-quality small- and medium-sized sites ranking higher, which is nice.”

Major losers were sites with deceptive ads, affiliate sites (thin on content, meant to pass traffic to other monetizing affiliates), and sites with security issues.

2014 September – Known PBNs De-Indexed

This change impacted search, but was not an algorithm change, data refresh, or UI update.

Starting in mid-to-late September 2014, Google de-indexed a large number of private blog network (PBN) sites that were being used to boost other sites and game Google’s search rankings.

Google then followed up on the de-indexing with manual actions for sites benefiting from those PBNs. These manual actions went out on September 18, 2014.

2014 August – Authorship Removed from Search Results

Author names and photos are no longer displayed in the search results alongside the pieces they’ve written.

Almost a year later, Gary Illyes suggested that sites with authorship markup should leave the markup in place because it might be used again in the future. However, it was later suggested that Google is perfectly capable of recognizing authorship from bylines.

2014 August – SSL becomes a ranking factor

Sites using SSL began to see a slight boost in rankings.

Google would later go on to increase this boost, and eventually warn users when they tried to access insecure (non-HTTPS) pages.

2014 July 24 – Google Local Update (Pigeon)

Google’s local search algorithm is updated to include more signals from traditional search (knowledge graph, spelling correction, synonyms, etc.).

2014 June – Authorship Photos Removed

Author photos are removed from the SERPs.

This was another step toward Google decommissioning Authorship markup.

2014 June – Payday Loan Update 3.0

Where Payday Loan 2.0 targeted spammy sites, Payday Loan 3.0 targeted spammy queries, or more specifically the types of illegal link schemes seen disproportionately within high-spam industries (payday loans, porn, gambling, etc.).

What do we mean by illegal? Link schemes that rely on hacking other websites or infecting them with malware.

This update also included better protection against negative SEO attacks.

2014 May 17-18 – Payday Loan Update 2.0

Payday Loan Update 2.0 was a comprehensive update to the algorithm (not just a data refresh). This update focused on devaluing domains that used spammy on-site tactics such as cloaking.

Cloaking is when the content Google sees for a page is different from the content a human user sees when they click through to that page from the SERPs.

2014 May – Panda 4.0 (Update 27)

Google had stopped announcing changes to Panda for a while, so when they announced Panda 4.0 we knew it was going to be a larger change to the overall algorithm.

Panda 4.0 impacted 7.5% of English queries, and led to a drastic nose dive for a slew of prominent sites like eBay, Ask.com, and Biography.com.

@mattcutts I hope it is not too painful 😉 pic.twitter.com/VUmBJ59IEG

— Steven Broschart (@optimizingexp) May 21, 2014

2014 February 6 – Page Layout 3.0 (Top Heavy 3.0)

This is a refresh of Google’s algorithm that devalues pages with too many above-the-fold ads, per Google’s blog:

We’ve heard complaints from users that if they click on a result and it’s difficult to find the actual content, they aren’t happy with the experience. Rather than scrolling down the page past a slew of ads, users want to see content right away.

So sites that don’t have much content “above-the-fold” can be affected by this change. If you click on a website and the part of the website you see first either doesn’t have a lot of visible content above-the-fold or dedicates a large fraction of the site’s initial screen real estate to ads, that’s not a very good user experience.

The Page Layout algorithm was originally launched on January 19, 2012, and had only one other update, in October 2012.

2013 Google Algorithm Updates

2013 December – Authorship Devalued

Authorship gets less of a boost in the search results. This was the first step Google took toward phasing out authorship markup.

2013 October – Penguin 2.1

Technically the 5th update to Google’s link-spam fighting algorithm, this minor update affects about 1% of search queries.

Penguin 2.1 launching today. Affects ~1% of searches to a noticeable degree. More info on Penguin: https://t.co/4YSh4sfZQj

— Matt Cutts (@mattcutts) October 4, 2013

2013 August – Hummingbird

Hummingbird was a full replacement of the core search algorithm, and Google’s largest update since Caffeine (Panda and Penguin had only been changes to portions of the old algorithm).

Hummingbird helped most with conversational search for results outside of the knowledge graph, where conversational search was already running. It significantly improved how Google interpreted the queries users typed into search.

This algorithm was named Hummingbird by Google because it’s “precise and fast.”

2013 July – Expansion of Knowledge Graph

The Knowledge Graph expands to nearly 25% of all searches, displaying information-rich cards right above or next to the organic search results.

2013 July – Panda Dance (Update 26)

Panda begins going through monthly refreshes, also known as the “Panda Dance,” which caused monthly shifts in search rankings.

The next time Google would acknowledge a formal Panda update outside of these refreshes would be almost a year later in May of 2014.

2013 June – Roll Out Anti-Spam Algorithm Changes

Google rolled out an anti-link-spam algorithm in June of 2013 targeting sites grossly violating webmaster guidelines with egregious unnatural link building.

Matt Cutts even acknowledged one target, ‘Citadel Insurance’, which built 28,000 links from 1,000 low-ranking domains within a single day (June 14th) and managed to reach position #2 for “car insurance” with the tactic.

By the end of June sites were finding it much harder to exploit the system with similar tactics.

@screamingfrog very natural…. @mattcutts

— Jamie Knop (@JamieKnop) June 21, 2013

@JamieKnop @screamingfrog yup, we saw that. Multi-week rollout going on now, from next week all all the way to the week after July 4th.

— Matt Cutts (@mattcutts) June 22, 2013

2013 June 11 – Payday Loans

This update impacted 0.3% of queries in the U.S., and as much as 4% of queries in Turkey.

This algorithm targets queries with an abnormally high incidence of SEO spam (payday loans, adult searches, drugs, pharmaceuticals) and applies extra filters to these types of queries specifically.

We just started a new ranking update today for some spammy queries. See 2:30-3:10 of this video: https://t.co/KOMozoc3lo #smx

— Matt Cutts (@mattcutts) June 11, 2013

2013 May 22 – Penguin 2.0

Penguin 2.0 was an update to the Penguin algorithm (as opposed to just a data refresh), and it impacted 2.3% of English queries.

What changed?

- Advertorials will no longer flow PageRank

- Niches that are traditionally spammy will see more impact

- Improvements to how hacked sites are detected

- Link spammers will see links from their domains transfer less value.

One of the biggest shifts with Penguin 2.0 was that it also analyzed link spam pointing to internal site pages, whereas Penguin 1.0 had looked specifically at spammy links pointing to domain home pages.

This marked the first time in 6 months that the Penguin algorithm had been updated, and it was the 4th Penguin update overall, following:

- April 24, 2012 – Penguin 1.0 Launched

- May 25, 2012 – Penguin 1.1 Data Refresh

- October 5, 2012 – Another Penguin Data Refresh

2013 May – Domain Diversity

This update reduced the number of times a user saw the same domain in the search results. According to Matt Cutts, once you’ve seen a cluster of roughly 4 results from the same domain, subsequent search pages are significantly less likely to show you results from that domain.

2013 May 8th – Phantom I

On May 8th, 2013, SEOs over at WebmasterWorld noticed intense fluctuation in the SERPs.

Lots of people dove into the data – some commenting that sites who had taken a dive were previously hit by Panda, but there were no conclusive takeaways. With no confirmation of major changes from Google, and nothing conclusive in the data – this anomaly came to be known as the “Phantom” update.

2013 March 14-15 – Panda Update 25

This is the 25th update for Panda, the algorithm that devalues low quality content in the SERPs. Matt Cutts confirmed that moving forward, Panda would be folded into regular algorithm updates, meaning it would roll out continuously instead of as discrete, pushed updates.

2013 January 22 – Panda Update 24

The 24th Panda update was announced on January 22, 2013 and impacted 1.2% of English search queries.

New Panda data refresh rolling out today: 1.2% of English queries affected. Background: https://t.co/EMBEJqGZ

— Google (@Google) January 22, 2013

2012 Google Algorithm Updates

2012 December 21 – Panda Update 23

The 23rd Panda update hit on December 21, 2012 and impacted 1.3% of English search queries.

2012 December 4 – Knowledge Graph Expansion

On December 4, 2012 Google announced a foreign-language expansion of the Knowledge Graph, its project to “map out real-world things as diverse as movies, bridges and planets.”

2012 November – Panda Updates 21 & 22

In November 2012 Panda had two updates in the same month: one on November 5, 2012 (1.1% of English queries impacted in the US) and one on November 22, 2012 (0.8% of English queries impacted in the US).

2012 October 9 – Page Layout Update

On October 9, 2012 Google rolled out an update to their Page Layout filter (also known as “Top Heavy”) impacting 0.7% of English-language search queries. This update rolled the Page Layout algorithm out globally.

Sites that made fixes after Google’s initial Page Layout Filter hit back in January of 2012 saw their rankings recover in the SERPs.

2012 October – Penguin Update 1.2

This was just a data refresh affecting 0.3% of English queries in the US.

Weather report: Penguin data refresh coming today. 0.3% of English queries noticeably affected. Details: https://t.co/Esbi2ilX

— Matt Cutts (@mattcutts) October 5, 2012

2012 September – Panda Updates 19 & 20

Panda update 19 hit on September 18, 2012 affecting 0.7% of English search queries, followed just over a week later by Panda update 20 which hit on September 27, 2012 affecting 2.4% of English search queries.

Panda update 20 was an actual algorithm update rather than just a data refresh, which accounts for the higher percentage of affected queries.

2012 September – Exact Match Domains

At the end of September Matt Cutts announced an upcoming change: low quality exact match domains were going to be taking a hit in the search results.

Up until this point, exact match domains had been weighted heavily enough in the algorithms to counterbalance low quality site content.

2012 August 20 – Panda Update 18

Panda version 3.9.1 rolled out on Monday, August 20th, 2012, affecting less than 1% of English search queries in the US.

This update was a data refresh.

Panda data refresh this past Monday. ~1% of queries noticeably affected. More context: https://t.co/nSjVRWGb

— Google (@Google) August 22, 2012

2012 August – Fewer Results on Page 1

In August Google began displaying 7 results for about 18% of queries, rather than the standard 10.

Upon further inspection it appeared that Google had reduced the number of organic results so it would have more space to test a suite of potential top-of-search features, including expanded sitelinks, images, and local results.

This change, in conjunction with the knowledge graph, paved the way for the top-of-search rich snippet results we see in search today.

2012 August 10 – Pirate/DMCA Penalty

Google announces it will devalue sites in the SERPs that are repeatedly accused of copyright infringement. As of this date, the number of valid copyright removal notices is a ranking signal in Google’s search algorithm.

2012 July 24 – Panda Update 17

On July 24, 2012 Google announced Panda 3.9.0, a refresh for the algorithm affecting less than 1% of search queries.

2012 July 27 – Webmaster Tool Link Warnings

Not technically an algorithm update, but it definitely affected the SEO landscape.

On July 27, 2012 Google posted an update clarifying topics surrounding a slew of unnatural link warnings that had recently been sent out to webmasters:

- Unnatural link warnings and drops in rankings are directly connected

- Google doesn’t penalize sites as much when they’re the victims of 3rd party bad actors

2012 June – Panda Updates 15 & 16

In June Google made two updates to its Panda algorithm fighting low quality content in the SERPs:

- Panda 3.7 rolled out on June 8, 2012 affecting less than 1% of English search queries in the U.S.

- Panda 3.8 rolled out on June 25, 2012 affecting less than 1% of queries worldwide.

Both updates were data refreshes.

2012 June – 39 Google Updates

On June 7, 2012 Google posted an update providing insight into search changes made over the course of May. Highlights included:

- Link Spam Improvements:

- Better hacked sites detection

- Better detection of inorganic backlink signals

- Adjustments to Penguin

- Adjustments to how Google handles page titles

- Improvements to autocomplete for searches

- Improvements to the freshness algorithm

- Improvements to rankings for news and recognition of major news events.

2012 May 25 – Penguin 1.1

A data refresh for the Penguin algorithm was released on May 25, 2012 affecting less than 0.1% of search queries.

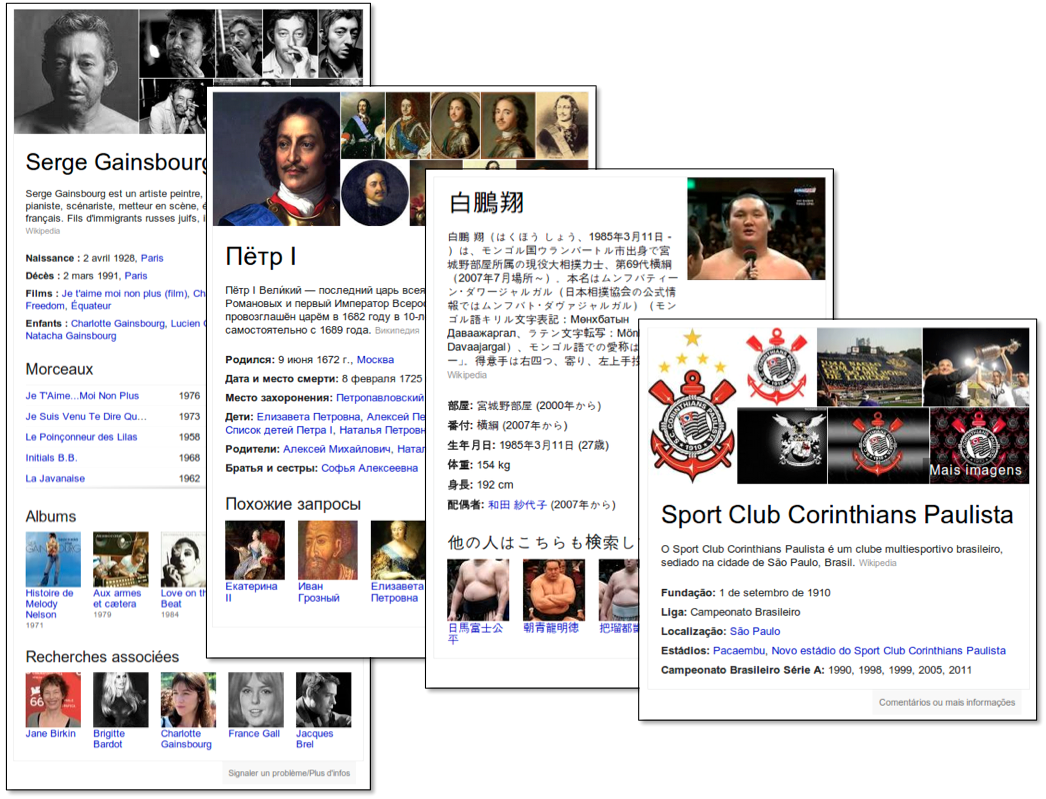

2012 May 16 – Knowledge Graph

On May 16, 2012 Google introduced the knowledge graph, a huge step forward in helping users complete their goals faster.

First, the knowledge graph improved Google’s understanding of entities in Search (whether words represented people, places, or things).

Second, it surfaced relevant information about these entities directly on the search results page as summaries and answers. This meant that in many instances users no longer needed to click into a search result to find the information they were seeking.

2012 May 4 – 52 April Updates

On May 4, 2012 Google posted an update providing insight into search changes made over the course of April. Highlights included:

- 15% increase in the base index

- Removed the freshness boost for low quality content

- Increased domain diversity in the search results.

- Changes to Sitelinks

- Sub sitelinks

- Better ranking of expanded sitelinks

- Sitelinks data refresh

- Adjustment to surface more authoritative results.

2012 April – Panda Updates 13 & 14

In April Google made two updates to its Panda algorithm fighting low quality content in the SERPs:

- Panda 3.5 rolled out on April 19, 2012

- Panda 3.6 rolled out on April 27, 2012 affecting 1% of queries.

Panda 3.5 seemed to target press portals and aggregators, as well as heavily-templated websites. This makes sense as these types of sites are likely to have a high number of pages with thin or duplicative content.

2012 April 24 – Penguin

The Penguin Algorithm was announced on April 24, 2012 and focused specifically on devaluing sites that engage in spammy SEO practices.

The two primary targets of Penguin 1.0? Keyword stuffing and link schemes.

2012 April – Parked Domain Bug

After a number of webmasters reported ranking shuffles, Google confirmed that a data error had caused some domains to be mistakenly treated as parked domains (and thereby devalued). This was not an intentional algorithm change.

2012 April 3 – 50 Updates

On April 3, 2012 Google posted an update providing insight into search changes made over the course of March. Highlights included:

- Sitelinks Data Refresh

- Better handling of queries with navigational and local intent

- Improvements to detecting site quality

- Improvements to how anchor text contributes to relevancy for sites and search queries

- Improvements to how search handles synonyms

2012 March – Panda Update 12

On March 23, 2012 we saw the Panda 3.4 update, a data refresh affecting 1.6% of queries.

Panda refresh rolling out now. Only ~1.6% of queries noticeably affected. Background on Panda: https://t.co/Z7dDS6qc

— Google (@Google) March 23, 2012

2012 February 27 – Panda Update 11

Panda Update 3.3 was a data refresh that was announced on February 27, 2012.

2012 February 27 – Series of Updates

On February 27, 2012 Google posted an update providing insight into search changes made over the course of February. Highlights included:

- Travel related search improvements

- International launch of shopping rich snippets

- Improved health searches

- Google changed how it was evaluating links, dropping a method of link analysis that had been used for the past several years.

2012 February – Venice

The Venice update changed the face of local search forever, as local results now showed up even without a geo modifier in the query itself.

2012 January – Page Layout Update

This update devalued pages in search that had too many ads “above-the-fold.” Google said that ads that prevented users from accessing content quickly provided a poor user experience.

2012 January 10 – Personalized Search

On January 10, 2012 Google announced Search, plus Your World. Google had already expanded search to include content personally relevant to individuals with Social Search; Your World was the next step.

This update pulled in information from Google+ such as photos, profiles, and more.

2012 January 5 – 30 Google Updates

On January 5, 2012 Google posted an update providing insight into search changes made over the course of December of 2011. Highlights included:

- Landing page quality became a signal for image search, beyond the image itself

- Soft 404 detection (when a page returns a success status code instead of a 404, but the content still isn’t accessible to the user).

- More rich snippets

- Better infrastructure for autocomplete (ex: spelling corrections)

- More accurate byline dates

- Related queries improvements

- Upcoming events at venues

- Faster mobile browsing: skipping the redirect step that sent users to a separate mobile site (e.g., m.domain.com)

2011 Google Algorithm Updates

2011 December 1 – 10 Google Updates

On December 1, 2011 Google posted an update providing insight into search changes made over the two preceding weeks. Highlights included:

- Refinements to the inclusion of related queries so they’d be more relevant

- Expansion of indexing to include more long tail keywords

- New parked domain classifier (placeholder sites hosting ads)

- More complete (fresher) blog results

- Improvements for recognizing and rewarding whichever sites originally posted content

- Top result selection code rewrite to avoid “host crowding” (too many results from a single domain in the search results).

- New verbatim tool

- New Google bar

2011 November 18 – Panda Update 10

The Panda 3.1 update rolled out on November 18th, 2011 and affected less than 1% of searches.

2011 November – Panda 3.1 (Update 9)

On November 18th, 2011 Panda Update 3.1 goes live, impacting <1% of searches.

2011 November – Automatic Translation & More

On November 14, 2011 Google posted an update providing insight into search changes made over the couple preceding weeks. Highlights included:

- Cross language results + automatic translation

- Better page titles in search results by de-duplicating boilerplate anchors (this refers to Google-generated page titles, which Google uses in place of a page’s HTML title tag when it can provide a better one)

- Extending application rich snippets

- Refined official page detection, adjusting how Google determines which pages are official

- Improvements to date-restricted queries

2011 November 3 – Fresher Results

Google puts an emphasis on more recent results, especially on time-sensitive queries.

- Ex: Recent events / hot topics

- Ex: Regularly occurring/recurring events

- Ex: Information that is frequently updated or quickly outdated (e.g., best SLR camera)

2011 October – Query Encryption

On October 18, 2011 Google announced that they were going to be encrypting search data for users who are signed in.

The result? Webmasters could tell that users were coming from Google search, but could no longer see the queries being used. Instead, webmasters began to see “(not provided)” showing up in their analytics reports.

This change followed a January rollout of the SSL encryption protocol to Gmail users.

2011 October 19 – Panda Update 8 (“Flux”)

In October Matt Cutts announced there would be upcoming flux from the Panda 3.0 update, affecting about 2% of search queries. Flux occurred throughout October as new signals were incorporated into the Panda algorithm and data was refreshed.

2011 September 28 – Panda Update 7

On September 28, 2011 Google released its 7th update to the Panda algorithm, Panda 2.5.

2011 September – Pagination Elements

Google added pagination elements – link attributes to help with pagination crawl/indexing issues.

- rel="next"

- rel="prev"

Note: Google no longer uses these as an indexing signal.

2011 August 16 – Expanded Site Links

On August 16, 2011 Google announced expanded display of sitelinks from a max of 8 links to a max of 12 links.

2011 August 12 – Panda Update 6

On August 12, 2011 Google rolled out Panda 2.4, expanding Panda to more languages and impacting 6-9% of queries worldwide.

2011 July 23 – Panda Update 5

Google rolled out Panda 2.3 in July of 2011, adding new signals to help differentiate between higher and lower quality sites.

2011 June 28 – Google+

On June 28, 2011 Google launched its own social network, Google+. The network was a sort of middle ground between LinkedIn and Facebook.

Over time, Google+ shares and +1s (likes) would become a temporary personalized search ranking factor.

Ultimately, though, Google+ was decommissioned in 2019.

2011 June 16 – Panda Update 4

According to Matt Cutts, Panda 2.2 improved scraper-site detection.

What’s a scraper? In this context, a scraper is software used to copy content from a website, often to be posted to another website for ranking purposes. This is considered a type of webspam (not to mention plagiarism).

This update rolled out around June 16, 2011.

2011 June 2 – Schema.org

On June 2, 2011 Google, Yahoo, and Microsoft announced a collaboration to create “a common vocabulary for structured data,” known as Schema.org.

2011 May 9 – Panda Update 3

Panda 2.1 rolled out in early May, and was relatively minor compared to previous Panda updates.

2011 April 11 – Panda Update 2

On April 11, 2011 Panda 2.0 rolled out globally to English users, impacting about 2% of search queries.

What was different in Panda 2.0?

- Better assessment of site quality for long-tail keywords

- This update also began to incorporate data about sites that users manually block

2011 March 28 – Google +1 Button

Google introduces the +1 Button, similar to the Facebook “like” button or the Reddit upvote. The goal? Bring trusted content to the top of the search results.

Later, in June, Google posted a brief update saying it had made the button faster, and in August of 2011 the button also became a share icon.

2011 February – Panda Update (AKA Farmer)

Panda was released to fight thin content and low-quality content in the SERPs. Panda was also designed to reward unique content that provides value to users.

As a result, sites with less intrusive ads started to do better in the search results, while sites with “thin” user-generated content went down, as did harder-to-read pages.

Per Google:

“As ‘pure webspam’ has decreased over time, attention has shifted instead to ‘content farms,’ which are sites with shallow or low-quality content.”

2011 January – Attribution Update

This update focused on stopping scraper sites from receiving benefit from stolen content. The algorithm worked to establish which site initially created and posted content, and boost that site in the SERPs over other sites which had stolen the content.

2011 January – Overstock.com & JCPenney Penalty

Overstock and J.C. Penney receive manual actions due to deceptive link building practices.

Overstock offered a 10% discount to universities, students, and parents, as long as they posted anchor-text-rich content to their university website. A competitor noticed the trend and reported them to Google.

J.C. Penney had thousands of backlinks built to its site targeting exact match anchor text. After receiving a manual action, it disavowed the spammy links and largely recovered.

2010 Google Algorithm Updates

2010 December – Social Signals Incorporated

Google confirms that it uses social signals, including shares when evaluating news stories, as well as author quality.

2010 December – Negative Reviews

In late November a story broke about businesses that were soaring in the search results, and growing exponentially, by being as terrible to their customers as possible.

Enraged customers were leaving negative reviews on every major site they could, linking back to these bad-actor businesses to warn others. But in search, all those backlinks were giving the bad actors more and more search equity, enabling them to show up as the first result for a wider and wider range of searches.

Google responded to the issue within weeks, making changes to ensure businesses could not abuse their users in that manner moving forward.

Per Google:

“Being bad is […] bad for business in Google’s search results.”

Additional Reading:

NYT – Bullies Rewarded in Search

2010 November – Instant Visual Previews

This temporary feature allowed users to see a visual preview of a website in the search results. It was quickly rolled back.

Additional Resources:

Google Blog – Beyond Instant Results, Instant Previews

2010 September – Google Instant

Google begins displaying search results before a user actually completes their query.

This feature lived for a long time (in tech years, anyway) but was sunset in 2017 as mobile search became dominant and Google realized it might not be the optimal experience for on-the-go mobile users.

2010 August – Brand Update

Google made a change to allow some brands/domains to appear multiple times on page one, depending on the search.

This feature ends up undergoing a number of updates over time as Google works to get the right balance of site diversity when encountering host-clusters (multiple results from the same domain in search).

2010 June – Caffeine Roll Out

On June 10, 2010 Google announced Caffeine.

Caffeine was an entirely new indexing system with a new search index. Where before there had been multiple indexes, each updated and refreshed at its own rate, Caffeine enabled continuous updating of small portions of the search index. Under Caffeine, newly indexed content was available within seconds of being crawled.

Per Google:

“Caffeine provides 50 percent fresher results for web searches than our last index, and it’s the largest collection of web content we’ve offered. Whether it’s a news story, a blog or a forum post, you can now find links to relevant content much sooner after it is published than was possible ever before.”

2010 May 3 – MayDay

The May Day update occurred between April 28th and May 3rd 2010. This update was a precursor to Panda and took a shot at combating content farms.

Google’s comment on the update? “If you’re impacted, assess your site for quality.”

2010 April – Google Places

In April of 2010 Local Business Center became Google Places. Along with this change came the introduction of service areas (as opposed to just a single address as a location).

Other highlights:

- Simpler method for advertising

- Google offered free professional photo shoots for businesses

- Google announced another batch of favorite places

By April of 2010, 20% of searches were already location-based.

2009 Google Algorithm Updates

2009 December – Real Time Search

Google announces search features related to newly indexed content: Twitter Feeds, News Results, etc. This real time feed was nested under a “latest results” section of the first page of search results.

2009 August 10 – Caffeine Preview

On August 10 Google begins to preview Caffeine, requesting feedback from users.

2009 February – Vince

Essentially the Vince update boosted brands.

Vince focused on trust, authority and reputation as signals to provide higher quality results which could push big brands further to the top of the SERPs.

Additional Resources:

Watch – Is Google putting more weight on brands in rankings?

Read – SEO Book – Google Branding

2008 Google Search Updates

2008 August – Google Suggest

Google introduces “suggest” which displays suggested search terms as the user is typing their query.

2008 April – Dewey

The Dewey update rolled out in late March/early April. The update was called Dewey because Matt Cutts chose the (slightly unique) term as one that would allow comparison between results from different data centers.

2007 Google Algorithm Updates

2007 June – Buffy

The Buffy update caused fluctuations for single-word search results.

Why Buffy? Google Webmaster Central product manager and long-time head of operations Vanessa Fox, a well-known Buffy fan, announced she was leaving Google.

Vanessa earned intense respect from webmasters over her tenure, both for her product leadership and for her responsiveness to the community: the people using Google’s products daily. The webmaster community named this update after her interest as a sign of respect.

2007 May – Universal Search

Old school organic search results are integrated with video, local, image, news, blog, and book searches.

2006 Google Search Updates

2006 November – Supplemental Update

An update to how the filtering of pages stored in the supplemental index is handled. Google went on to scrap the supplemental index label in July 2007.

2005 Google Search Updates

2005 November – Big Daddy

This was an update to the Google search infrastructure and took 3 months to roll out: January, February, and March. This update also changed how Google handled canonicalization and redirects.

2005 October 16 – Jagger Rollout Begins

The Jagger Update rolled out as a series of October updates.

The update targeted low quality links, reciprocal links, paid links, and link farms. The update helped prepare the way for the Big Daddy infrastructure update in November.

2005 October – Google Local / Maps

In October 2005, Google merged its Local Business Center data with Maps data.

2005 September – Gilligan / False Alarm

A number of SEOs noticed ranking fluctuations in September, which they initially named “Gilligan.” It turned out there was no algorithm update, just a data refresh (index update).

Given the news, many SEOs retitled their posts “False Alarm.” However, the community has since come to treat many data refreshes as updates, so we’ll let the “Gilligan” update stand.


2005 June – Personalized Search

Google relaunches personalized search. This time, it shapes future results based on your past selections.


2005 June – XML sitemaps

Google launches the ability to submit XML sitemaps through its Google Sitemaps program (later renamed Google Webmaster Tools). XML sitemaps went beyond old HTML sitemaps: they gave webmasters some influence over crawling and indexation by letting them feed pages directly to Google’s index.
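To make the idea concrete, here is a minimal Python sketch that builds an XML sitemap from a list of URLs. It assumes the later sitemaps.org protocol (version 0.9, namespace `http://www.sitemaps.org/schemas/sitemap/0.9`) rather than Google’s original 2005 schema, and the function name `build_sitemap` and the example URLs are hypothetical, chosen only for illustration.

```python
import xml.etree.ElementTree as ET

# Assumption: the sitemaps.org 0.9 namespace, not Google's original 2005 schema.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap as a string (hypothetical helper).

    `urls` is an iterable of (loc, lastmod) tuples; lastmod may be None.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing.
        ET.SubElement(url, "loc").text = loc
        if lastmod is not None:
            # W3C date format, e.g. "2005-06-03".
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2005-06-03"),
        ("https://www.example.com/about", None),
    ]))
```

The `<lastmod>` element is optional in the protocol, so the sketch only emits it when a date is supplied; only `<loc>` is required for each URL.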


2005 May – Bourbon

The May 2005 update, nicknamed Bourbon, appeared to devalue sites and pages with duplicate content, and affected 3.5% of search queries.

2005 February – Allegra

The Allegra update rolled out between February 2, 2005 and February 8, 2005. It caused major fluctuations in the SERPs. While nothing has ever been confirmed, these are the most popular theories amongst SEOs for what changed:

- LSI (latent semantic indexing) is used as a ranking signal

- Duplicate content is devalued

- Suspicious links are somehow accounted for


2005 January – NoFollow

In January 2005, Google introduced the “nofollow” link attribute to combat spam and give webmasters control over the quality of their outbound links. This change helped clean up spammy blog comments: comments mass-posted to blogs across the internet with links meant to boost the rankings of the target site.

Future Changes:

- On June 15, 2009, Google changed the way it treats nofollow links in response to webmasters manipulating pages with “PageRank sculpting.”

- Google suggests webmasters use “nofollow” attributes for ads and paid links.

- On September 10, 2019, Google announced two additional link attributes: “sponsored” and “ugc.”

- Sponsored is for links that are paid or advertorial.

- UGC is for links which come from user generated content.
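The sketch below shows how these attributes appear in markup, using a small Python helper that a CMS might use to render outbound links. The function `render_link`, the `REL_BY_KIND` table, and the link categories (“editorial,” “paid,” “ugc,” “untrusted”) are hypothetical names for illustration; only the rel values themselves (`nofollow`, `sponsored`, `ugc`) come from Google’s announcements.

```python
from html import escape

# Hypothetical mapping from where a link came from to the rel value applied.
# Editorial links you vouch for get no rel qualifier at all.
REL_BY_KIND = {
    "editorial": None,
    "paid": "sponsored",      # ads and paid/advertorial links
    "ugc": "ugc",             # comments, forum posts, other user content
    "untrusted": "nofollow",  # links you'd rather not vouch for
}


def render_link(href, text, kind="editorial"):
    """Render an <a> tag with a rel attribute chosen by link kind."""
    rel = REL_BY_KIND[kind]  # unknown kinds raise KeyError on purpose
    rel_attr = f' rel="{rel}"' if rel else ""
    # escape() converts &, <, >, and quotes so the markup stays valid.
    return f'<a href="{escape(href)}"{rel_attr}>{escape(text)}</a>'


if __name__ == "__main__":
    print(render_link("https://www.example.com/", "Example"))
    print(render_link("https://shop.example.com/deal", "Deal", "paid"))
    print(render_link("https://forum.example.com/post", "A comment", "ugc"))
```

Failing loudly on an unrecognized link kind is a deliberate choice in this sketch: silently emitting an unqualified link is exactly the kind of mistake the attributes were meant to prevent.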


2004 Google Algorithm Updates

2004 February – Brandy

The Brandy update rolled out in the first half of February and included five significant changes to Google’s algorithmic formulas (confirmed by Sergey Brin).

Over the same period, Google significantly expanded its index, by over 20%, and began including dynamic web pages.

What else changed?

- Google began shifting importance away from PageRank to link quality, link anchors, and link context.

- Attention is being given to link neighborhoods: how well your site is connected to others in your sector or space. This meant that outbound links became more important to a site’s overall SEO.

- Latent Semantic Indexing increases in importance. Tags (titles, metas, H1/H2) take a back seat to LSI.

- Keyword analysis gets a lot better. Google gets better at recognizing synonyms using LSI.

2004 January – Austin

Austin followed up on Florida, continuing to clean up spammy SEO practices and push unworthy sites out of the first pages of search results.

What changed?

- Invisible text took another hit

- Meta-tag stuffing was a target

- FFA (Free for all) link farms no longer provided benefit

Many SEOs also speculated that this had been a change to Hilltop, a page-ranking algorithm that had been around since 1998.


2003 Google Algorithm Updates

2003 November 16 – Florida

Google’s Florida update rolled out on November 16, 2003 and targeted spammy SEO practices such as keyword stuffing. Many sites that were trying to game the search engine algorithms instead of serving users also fell in the rankings.

2003 September – Supplemental Index

Google split its index into a main index and a supplemental index. The goal was to increase the number of pages Google could crawl and index. The supplemental index had fewer restrictions on indexing pages. Pages from the supplemental index would only be shown if there were very few good results from the main index to display for a search.

When the supplemental index was introduced some people viewed being relegated to the supplemental index as a penalty or search results “purgatory”.

Google retired the supplemental index label in 2007, but has never said that it retired the supplemental index itself. That said, it’s widely known that Google maintains multiple indices, so it is within the realm of reason that the supplemental index may still be one of them. While the label disappeared, many wonder whether the supplemental index has continued to exist and morphed into what we see today as “omitted results.”

Sites found they were able to move from the supplemental index to the main index by acquiring more backlinks.


2003 July – Fritz (Everflux)

In July 2003, Google moved away from monthly index updates (often referred to as the Google Dance) to daily updates, in which a portion of the index was refreshed each day. These regular updates came to be referred to as “everflux.”

2003 June – Esmeralda

Esmeralda was the last giant monthly index update before Google switched over to daily index updates.

2003 May – Dominic

Google’s Dominic update focused on battling spammy link practices.

2003 April – Cassandra

Google’s Cassandra update launched in April 2003 and targeted spammy SEO practices, including hidden text, heavily co-linked domains, and other low-quality linking practices.

In April 2003, Google also began allowing banned sites to submit a reconsideration request after a manual penalty.


2003 February – Boston

Google’s first named update was Boston, which rolled out in February 2003. The Boston update improved the algorithms that analyze a site’s backlink data.

2002 Google Algorithm Updates

2002 September – 1st Documented Update

Google’s first documented search algorithm update happened on September 1, 2002. It was also the kickoff of “Google Dance” – large-scale monthly refreshes of Google’s search index.

The update shocked SEOs, some of whom claimed “PageRank [is] DEAD.” It was also a little imperfect, with issues such as 404 pages showing up on the first page of search results.


2000 Google Search Updates

2000 December – Google Toolbar

Google launches its search toolbar for browsers. The toolbar highlights search terms within webpage copy and allows users to search within websites that don’t have their own site search.
