[vc_row full_width=”stretch_row” css=”.vc_custom_1571406556143{padding-top: 50px !important;padding-bottom: 50px !important;background-image: url(https://www.linkgraph.com/wp-content/uploads/2017/02/bg-pattern.jpg?id=206) !important;}”][vc_column][vc_column_text]

Robots.txt Tester

[/vc_column_text][/vc_column][/vc_row][vc_row css=”.vc_custom_1540391403209{padding-top: 50px !important;padding-right: 30px !important;padding-bottom: 10px !important;padding-left: 30px !important;}”][vc_column css=”.vc_custom_1540391093223{margin-top: 0px !important;margin-right: 0px !important;margin-bottom: 0px !important;margin-left: 0px !important;padding-top: 0px !important;padding-right: 0px !important;padding-bottom: 0px !important;padding-left: 0px !important;}”][vc_raw_html css=”.vc_custom_1571406761835{margin-top: 0px !important;margin-right: 0px !important;margin-bottom: 0px !important;margin-left: 0px !important;padding-top: 0px !important;padding-right: 0px !important;padding-bottom: 0px !important;padding-left: 0px !important;}”]JTNDaWZyYW1lJTIwc3JjJTNEJTIyaHR0cHMlM0ElMkYlMkZwdWJsaWMubGlua2xhYm9yYXRvcnkuY29tJTJGcm9ib3RzLXZhbGlkYXRvciUyRiUyMiUyMHdpZHRoJTNEJTIyMTAwJTI1JTIyJTIwaGVpZ2h0JTNEJTIyNTAwJTIyJTIwcG9zaXRpb24lM0QlMjJyZWxhdGl2ZSUyMiUyMGZyYW1lYm9yZGVyJTNEJTIyMCUyMiUyMHNjcm9sbGluZyUzRCUyMnllcyUyMiUzRUJyb3dzZXIlMjBub3QlMjBjb21wYXRpYmxlLiUyMCUzQyUyRmlmcmFtZSUzRQ==[/vc_raw_html][/vc_column][/vc_row][vc_row css=”.vc_custom_1571343850078{padding-top: 20px !important;padding-bottom: 40px !important;}”][vc_column][vc_toggle title=”What is a Robots.txt file?”]The robots exclusion standard, also known as the robots exclusion protocol, is a standard used by websites to communicate with web robots. The file that implements this protocol is usually referred to simply as robots.txt.

A web robot may also be called a spider, crawler, or wanderer. These robots have many potential uses. Search engines typically use crawlers, such as Googlebot or Bingbot, to discover and index web content. When a search engine indexes a web page, it adds that page to the pool of potential results it can serve to users.[/vc_toggle][vc_toggle title=”Why would I want to block a robot or crawler?”]There are many reasons. You may want to make your development team’s staging or testing site invisible to search engines, avoid having duplicate content show up in search, or keep your site from being overloaded by bot requests.

If you have a site that includes contact information for staff, you may want to keep those pages from being crawled and the information harvested by spammers. Remember that web robots can be created by anyone, not just search engines. A scammer could use a bot to scan a website for email addresses or other identifying information. Site owners who wish to control which crawlers access their web pages can limit bots across the entire site or on specific pages using robots.txt and .htaccess files.

Robots.txt files sit at the root of a domain (website.com/robots.txt) and give crawlers instructions that “allow” or “disallow” them to scan your pages. They may also be complemented by an XML sitemap, which alerts search engines to the pages available for crawling.

Robots.txt files can be used freely by anyone. For most of its history the protocol was an informal convention that no standards body owned, although the IETF published it as RFC 9309 in 2022.[/vc_toggle][vc_toggle title=”How would I create a Robots.txt file manually?”]You may want to create your own files for more specific purposes. In this case, you’ll need to know where to put the file as well as what sorts of things to put in it. A robots.txt file generally needs to go in the top-level directory of your web server. When a search engine bot looks for the robots.txt file for a URL, it strips the path component from the URL and replaces it with /robots.txt. If the file is found, the crawler considers each directive in it before scanning your pages, instead of scanning them as it normally would.
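The lookup described above can be illustrated with a minimal Python sketch. The function name `robots_url` and the sample address are assumptions made for this example, not part of any crawler’s actual code.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url: str) -> str:
    """Return the robots.txt address a crawler would check for page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, drop the path, query, and fragment,
    # then point at the file in the top-level directory.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://website.com/blog/post?id=7"))
# https://website.com/robots.txt
```

Because the path is always discarded, a robots.txt file placed in a subfolder would never be found by this kind of lookup.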

For example, if you wanted to disallow spiders from crawling your site, you could create a file specifying the following:

User-agent: *
Disallow: /

In this example, the asterisk addresses all crawlers, and the forward slash represents your entire site. After reading this file, all compliant crawlers will refrain from crawling your site. Of course, files can get more complex to disallow specific crawlers, grant full access, or grant limited access to certain webpages.
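To see how a compliant program might interpret the two-line file above, here is a small sketch using Python’s standard `urllib.robotparser` module. The bot name `ExampleBot` and the page URL are placeholders chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# The same rules as in the example: every bot, the whole site.
rules = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# A compliant crawler asks this question before requesting a page.
blocked_page = "https://website.com/any/page.html"
allowed = parser.can_fetch("ExampleBot", blocked_page)
print(allowed)  # False
```

This only models a crawler that chooses to obey the file; nothing in robots.txt enforces the rule.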

If you feel your servers are in danger of being overloaded by bots, you can also add a crawl-delay directive to control how quickly web robots request your content, although not every crawler honors it. Left to their own devices, bots will generally crawl as quickly as possible to make the most of their crawl budget, or the number of pages they crawl in a given time frame.
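As a rough sketch of how a polite crawler might read a crawl-delay value, the example below again uses Python’s `urllib.robotparser`. The ten-second delay, the `/search/` path, and the bot name `ExampleBot` are assumptions made for the example.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Crawl-delay: 10
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# crawl_delay() returns None when no delay is set for the bot,
# so fall back to zero seconds in that case.
delay = parser.crawl_delay("ExampleBot") or 0
print(delay)  # 10
```

A crawler written this way would wait `delay` seconds between requests, for instance with `time.sleep(delay)`.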

Robots.txt files can be created in anything that produces a plain text file. This makes Notepad a good choice, or you can save as plain text in a word processor. Other formats, such as PDF, should be avoided. It’s important to know that the filename is case sensitive and must be saved exactly as “robots.txt.” You’ll also need to know the basic syntax, or “language,” of the files. The most common terms you’ll see are the following:

- User-agent: This specifies the bot(s) you’re giving instructions to. These will typically be search engine bots, but there may be exceptions.

- Allow: This is honored by Googlebot and most other major crawlers, and it tells the bot that it can access a specific page or subfolder even if its parent folder is disallowed.

- Disallow: This tells bots not to crawl the specified URL.

- Sitemap: This tells bots about the location of any sitemap associated with the URL, though the function is supported only by Google, Bing, Ask, and Yahoo.

- Crawl-delay: This specifies the amount of time bots should wait before loading and crawling through page content.
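The directives listed above can be combined in one file. The following sketch parses such a file with Python’s `urllib.robotparser`; the paths, the sitemap address, and the bot name `ExampleBot` are hypothetical. Note that this particular parser applies the first matching rule, so the Allow line is placed before the broader Disallow line. Other crawlers may resolve conflicts differently.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Allow: /private/press-kit/
Disallow: /private/
Sitemap: https://website.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# The subfolder is reachable even though its parent folder is disallowed.
press_kit_ok = parser.can_fetch(
    "ExampleBot", "https://website.com/private/press-kit/logo.png"
)
notes_ok = parser.can_fetch("ExampleBot", "https://website.com/private/notes.html")
sitemaps = parser.site_maps()  # available in Python 3.8 and later

print(press_kit_ok, notes_ok, sitemaps)
```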

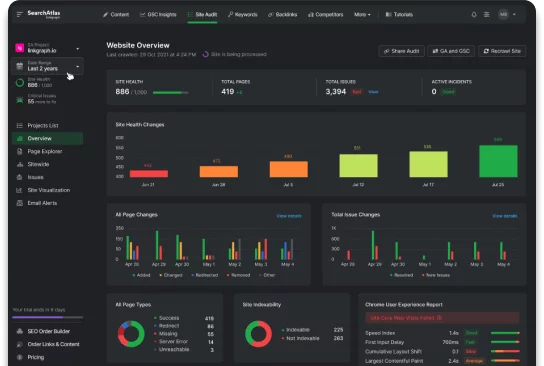

Certain search engines also support a couple of wildcard expressions that can identify multiple pages or folders for exclusion from crawlers. The two most common are the asterisk (*), which matches any sequence of characters, and the dollar sign ($), which marks the end of a URL.[/vc_toggle][vc_toggle title=”Is there a tool to help me create a Robots.txt file?”]A robots.txt generator will allow you to select specific URLs you want to prevent from being crawled and specific bots you want to allow or disallow.

Once you’re done selecting URLs and bots, click the “Generate Robots.txt” button to receive a ready-made file to use on your domain. Alternatively, click the “Download Robots.txt” button to receive a file in text form.[/vc_toggle][vc_toggle title=”How do I test my Robots.txt file is set up correctly?”]Robots.txt testing tools let you see whether your file blocks certain search engine crawlers from accessing your site. If you’re ever in doubt about whether content you want indexed and ranked can be accessed by crawlers, a robots.txt testing tool is an easy-to-use ticket to peace of mind.

These tools can also point out logic errors or syntax inconsistencies in your files. Their limitations are that they typically test only for certain bots (sometimes only Google’s bots), and that changes made to your files in the tool do not automatically carry over to your site. The webmaster still needs to update the files manually.
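To give a sense of what a syntax check involves, here is a deliberately simple sketch in Python. It is not how the tool on this page works; the function `lint_robots`, the set of known directives, and the sample file are all assumptions made for illustration.

```python
KNOWN = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}
GROUP_RULES = {"allow", "disallow", "crawl-delay"}

def lint_robots(text: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for lines that look wrong."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and padding
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field = line.split(":", 1)[0].strip()
        name = field.lower()
        if name not in KNOWN:
            problems.append((number, f"unknown directive '{field}'"))
        elif name == "user-agent":
            seen_user_agent = True
        elif name in GROUP_RULES and not seen_user_agent:
            problems.append((number, f"'{field}' appears before any User-agent line"))
    return problems

sample = "Disallow: /tmp/\nUser-agent: *\nDisalow: /admin/\nAllow /public/\n"
for number, message in lint_robots(sample):
    print(number, message)
```

On the sample file, this flags line 1 (a rule with no User-agent line before it), line 3 (a misspelled directive), and line 4 (a missing colon).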

To test your robots.txt file, simply use the tool at the top of this page.[/vc_toggle][vc_toggle title=”What are best practices for Robots.txt files?”]

Robots.txt files are great for certain situations, but it’s very important for your SEO efforts that you don’t accidentally do something like prevent Googlebot or Bingbot from crawling your site altogether. If search engine bots can’t crawl your site, then you can’t rank. Some of the best uses of a robots.txt file include preventing duplicate content from appearing in search engine result pages (SERPs), keeping sections of your site private, specifying crawl delays, and keeping internal search results from showing on public SERPs.

For SEO purposes, you’ll need to remember that pages blocked by a robots.txt file will not be crawled, so the links on them will not be followed, which can prevent the linked pages from being indexed. This also prevents any link equity from being shared from or to the blocked page. Robots.txt files also shouldn’t be used to prevent sensitive information from showing in search results. Because other pages may link directly to the page containing sensitive information (such as login information), that page may still be indexed. To prevent this, it’s best to use other methods like password protection or a noindex directive.

Some search engines also use multiple bots. For example, Google uses Googlebot for organic search, but it also uses Googlebot-Image for image search, so it’s actually possible to write separate groups of rules in your robots.txt file to control how each bot crawls your content. For any webmaster particularly concerned about privacy from the more traditional search engines like Google and Bing, there are some alternative services, such as Yandex.
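A short sketch of per-bot rules, again checked with Python’s `urllib.robotparser`, might look like the following. The folder names and page URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# One group for the image crawler, one catch-all group for everyone else.
rules = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

photo = "https://website.com/photos/team.jpg"
image_bot_ok = parser.can_fetch("Googlebot-Image", photo)  # image group applies
web_bot_ok = parser.can_fetch("Googlebot", photo)          # catch-all group applies

print(image_bot_ok, web_bot_ok)  # False True
```

Here the image crawler is kept out of the photos folder, while the main crawler can still reach the same URL because only the catch-all group applies to it.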

[/vc_toggle][vc_toggle title=”What are the limitations of Robots.txt files?”]Before using robots.txt files, there are some important things to take into consideration. Firstly, certain programs may ignore your robots.txt files altogether. This is especially true of malware looking for security vulnerabilities and of bots used by scammers and other malicious parties. Secondly, robots.txt files are publicly available, meaning that anyone can find out which sections of your site you have disallowed. This makes a robots.txt file useless for hiding information.

It’s theoretically possible to block malicious bots, but in practice this can be difficult. Blocking a bot through robots.txt only works if the bot actually obeys the file, which a malicious program is unlikely to do. It may still be possible, however, to identify such a program and block it specifically. If you find that a malicious program is operating from one IP address, you can use a firewall to deny access. As a next step, you can use advanced firewall rules to block multiple IP addresses if they are attacking as part of a network, though this can affect good bots as well as bad ones.[/vc_toggle][/vc_column][/vc_row][vc_row full_width=”stretch_row” css_animation=”bottom-to-top” css=”.vc_custom_1558778842982{background-color: #010c20 !important;}”][vc_column width=”1/4″][vc_empty_space height=”50″][vc_single_image image=”1121″ img_size=”full”][vc_btn title=”Chat with Someone Smart” size=”lg” i_icon_fontawesome=”fa fa-weixin” add_icon=”true” custom_onclick=”true” drift_chat_selector=”.drift-open-chat” elements=”document.querySelectorAll(selector);” i=”0;” handleclick=”openSidebar.bind(this,” el_class=”cta-chat-start”][vc_custom_heading text=”CONTACT US” font_container=”tag:div|font_size:18px|text_align:left|color:%23ffffff” use_theme_fonts=”yes” css=”.vc_custom_1558778763100{margin-bottom: 10px !important;}”][vc_column_text]

[/vc_column_text][/vc_column][vc_column width=”1/4″][vc_empty_space height=”50″][vc_empty_space height=”35″][/vc_column][vc_column width=”1/2″][/vc_column][/vc_row]