What Is Crawl Budget & How to Optimize for It

Crawl budget may seem like a foreign concept when you’re first learning how search engine bots work. While it isn’t the easiest SEO concept, it’s less complicated than it may seem. Once you understand what a crawl budget is and how search engine crawling works, you can begin to optimize your website for crawlability. This process will help your site achieve its highest potential for ranking in Google’s search results.

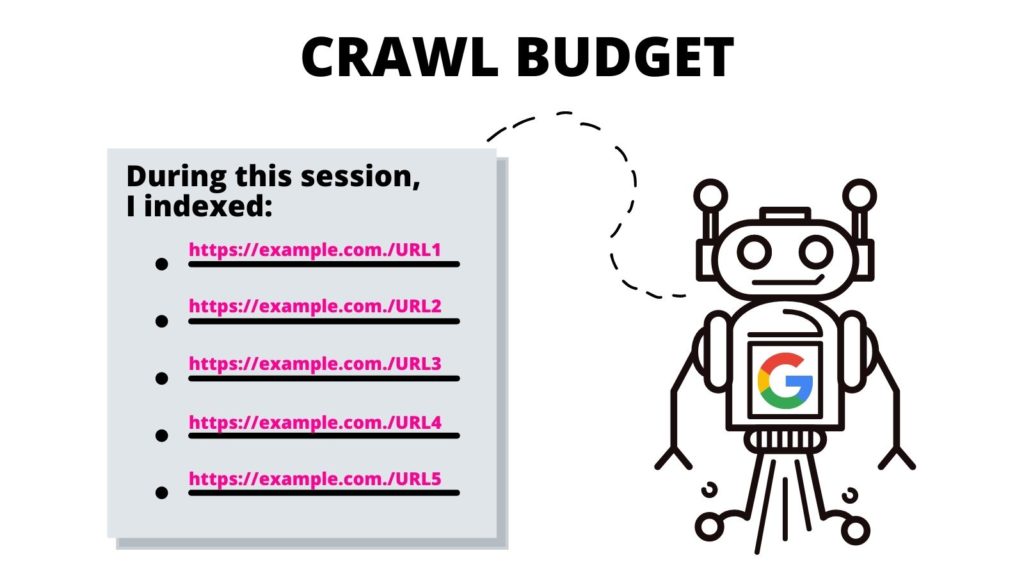

What Is a Crawl Budget?

A crawl budget is the number of URLs from one website that search engine bots can crawl within one crawl session. The “budget” of a crawl session differs from website to website based on each individual site’s size, traffic metrics, and page load speed.

If you’ve gotten this far and the SEO terms are unfamiliar to you, use our SEO glossary to become more familiar with the definitions.

What Factors Affect a Website’s Crawl Budget?

- Popularity: The more a site or page is visited, the more often it should be analyzed for updates. Furthermore, more popular pages will accrue more inbound links more rapidly.

- Size: Large websites and pages with more data-intense elements take longer to crawl.

- Health/Issues: When a webcrawler reaches a dead end through internal links, it takes time for it to find a new starting point, or it abandons the crawl. 404 errors, redirects, and slow loading times slow down and stymie webcrawlers.

How Does Your Crawl Budget Affect SEO?

The webcrawler indexing process makes search possible. If your content cannot be found and then indexed by Google’s webcrawlers, your web pages, and your website as a whole, will not be discoverable by searchers. Your site would then miss out on a lot of search traffic.

Why Does Google Crawl Websites?

Googlebots systematically go through a website’s pages to determine what the page and overall website are about. The webcrawlers process, categorize, and organize data from that website page-by-page in order to create a cache of URLs along with their content, so Google can determine which search results should appear in response to a search query.

Additionally, Google uses this information to determine which search results best fit the search query in order to determine where each search result should appear in the hierarchical search results list.

What Happens During a Crawl?

Google allots a set amount of time for a Googlebot to process a website. Because of this limitation, the bot likely will not crawl an entire site during one crawl session. Instead, it will work its way through all the site’s pages based on the robots.txt file and other factors (such as the popularity of a page).

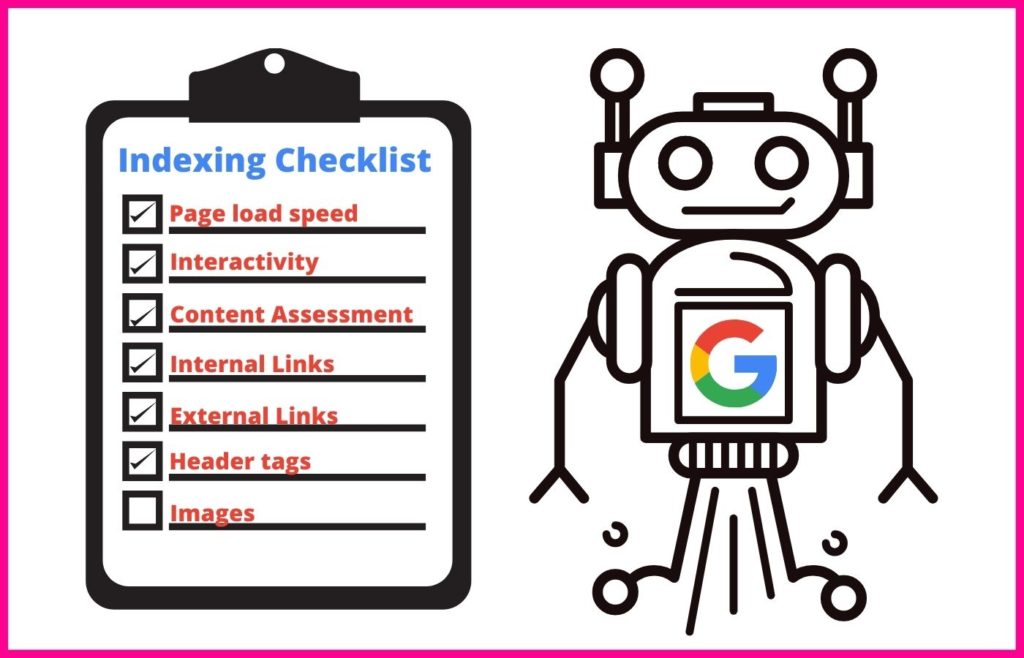

During the crawl session, a Googlebot will use a systematic approach to understanding the content of each page it processes.

This includes indexing specific attributes, such as:

- Meta tags (using NLP to determine their meaning)

- Links and anchor text

- Rich media files for image searches and video searches

- Schema markup

- HTML markup

The web crawler will also run a check to determine if the content on the page is a duplicate of a canonical. If so, Google will move the URL down to a low priority crawl, so it doesn’t waste time crawling the page as often.

What are Crawl Rate and Crawl Demand?

Google’s web crawlers assign a certain amount of time to every crawl they perform. As a website owner, you have no control over this amount of time. However, you can change how quickly they crawl individual pages on your site while they’re on your site. This number is called your crawl rate.

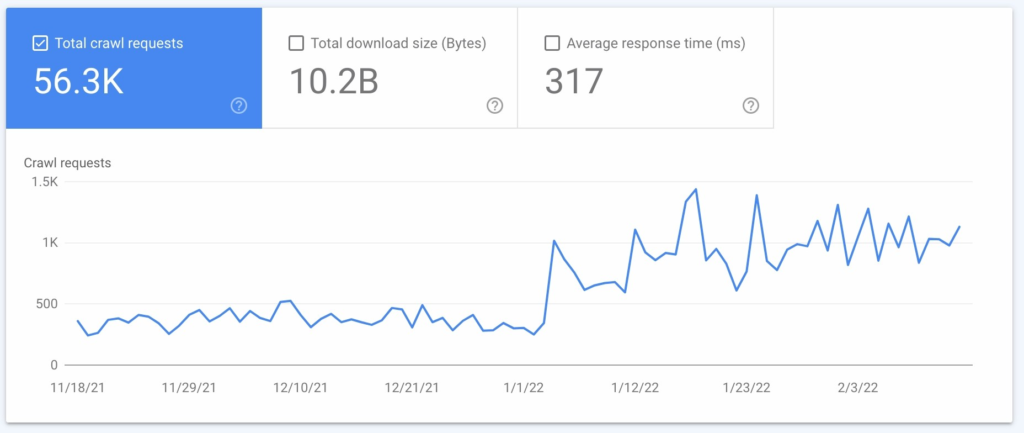

Crawl demand is how often Google crawls your site. This frequency is based on the demand for your site among internet users and how often your site’s content needs to be updated in search. You can discover how often Google crawls your site using a log file analysis (see #2 below).

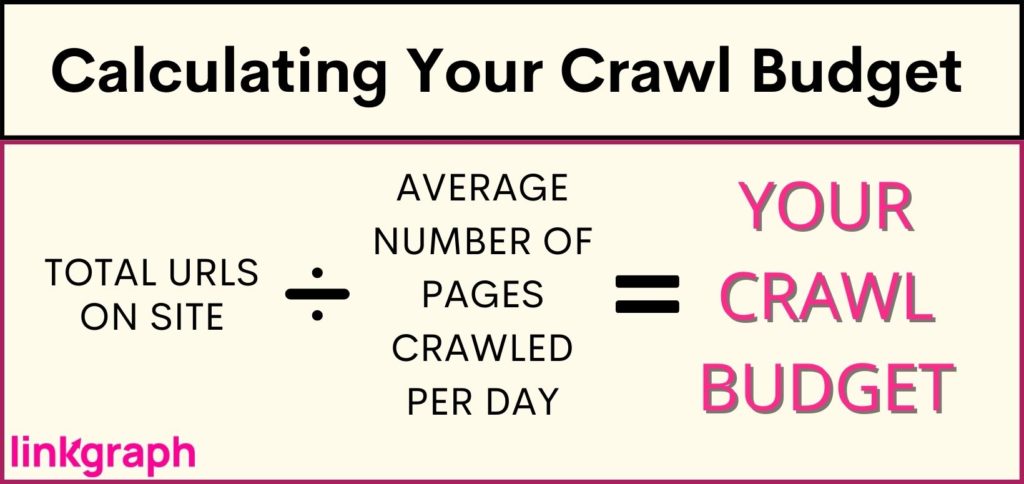

How Can I Determine My Site’s Crawl Budget?

Because Google limits how often it crawls your site and for how long, you want to be aware of what your crawl budget is. However, Google doesn’t provide site owners with this number, which is a problem if your budget is so narrow that new content won’t hit the SERPs in a timely manner. This can be disastrous for important content and new pages, like product pages, which could make you money.

To understand if your site is facing crawl budget limitations (or to confirm that your site is A-OK), you will want to:

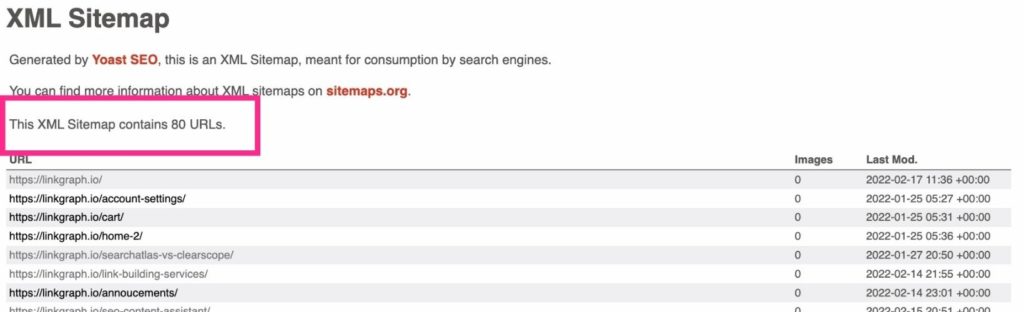

- Get an inventory of how many URLs are on your site. If you use Yoast, your total will be listed at the top of your sitemap URL.

- Once you have this number, use the “Settings” > “Crawl stats” section of Google Search Console to determine how many pages Google crawls on your site daily.

- Divide the number of pages on your sitemap by the average number of pages crawled per day.

- If the result is below 10, your crawl budget should be fine. However, if your number is higher than 10, you could benefit from optimizing your crawl budget.
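
The arithmetic in the steps above can be sketched in a few lines of Python. This is only an illustration: the function names and the sample figures are assumptions, and the threshold of 10 is the rule of thumb from this article, not a number published by Google.

```python
def crawl_budget_ratio(total_urls: int, avg_crawled_per_day: float) -> float:
    """Return sitemap URLs divided by the average pages crawled per day."""
    if avg_crawled_per_day <= 0:
        raise ValueError("avg_crawled_per_day must be positive")
    return total_urls / avg_crawled_per_day


def needs_optimization(ratio: float, threshold: float = 10.0) -> bool:
    """True when the ratio exceeds the rule-of-thumb threshold."""
    return ratio > threshold


# Hypothetical figures for illustration only.
ratio = crawl_budget_ratio(total_urls=12_000, avg_crawled_per_day=800)
print(f"ratio = {ratio:.1f}, optimize = {needs_optimization(ratio)}")
```

With these made-up numbers, the site has 15 times more URLs than Google crawls per day, so it would be a candidate for the optimization steps that follow.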

How Can You Optimize for Your Crawl Budget?

When your site has become too big for its crawl budget, you will need to dive into crawl budget optimization. Because you cannot tell Google to crawl your site more often or for a longer amount of time, you must focus on what you can control.

Crawl budget optimization requires a multi-faceted approach and an understanding of Google best practices. Where should you start when it comes to making the most of your crawl rate? This comprehensive list is written in hierarchical order, so begin at the top.

1. Consider Increasing Your Site’s Crawl Rate Limit

Google sends requests simultaneously to multiple pages on your site. However, Google tries to be courteous and not bog down your server resulting in slower load time for your site visitors. If you notice your site lagging out of nowhere, this may be the problem.

To avoid hurting your users’ experience, Google allows you to reduce your crawl rate. Doing so will limit how many pages Google can crawl simultaneously.

Interestingly enough, though, Google also allows you to raise your crawl rate limit. The effect is that Google can pull more pages at once, resulting in more URLs being crawled per session. However, reports suggest Google is slow to respond to a crawl rate limit increase, and an increase doesn’t guarantee that Google will crawl more pages simultaneously.

How to Increase Your Crawl Rate Limit:

- In Search Console, go to “Settings.”

- From there, you can view if your crawl rate is optimal or otherwise.

- Then you can increase the limit to a more rapid crawl rate for 90 days.

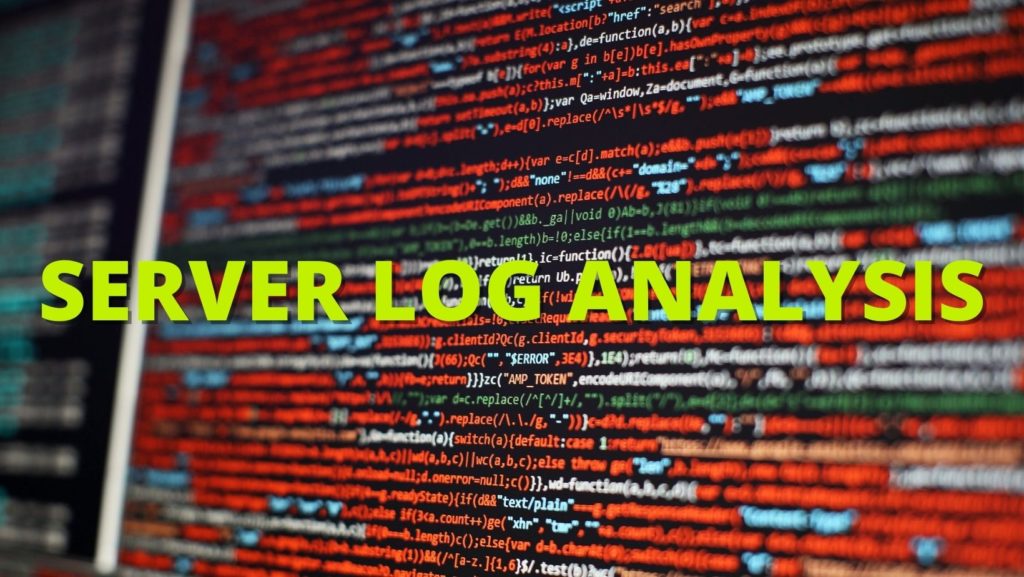

2. Perform a Log File Analysis

A log file analysis is a report from the server that reflects every request sent to the server. This report will tell you exactly what Googlebots do on your site. While this process is often performed by technical SEOs, you can talk to your server administrator to obtain one.

Using your Log File Analysis or server log file, you will learn:

- How frequently Google crawls your site

- What pages get crawled the most

- Which pages return an error status code or fail to respond

Once you have this information, you can use it to perform #3 through #7.
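
As a rough sketch of what a log file analysis involves, the Python below counts Googlebot requests per URL and flags URLs that returned 4xx or 5xx codes. It assumes your server writes the common "combined" access log format; the sample lines, IP addresses, and paths are invented for illustration. Keep in mind that a user agent string can be spoofed, so a real analysis would also verify that the requests come from Google.

```python
import re
from collections import Counter

# Pulls the request path, status code, and user agent out of a combined-format line.
LINE_RE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines):
    """Count Googlebot hits per path and the paths that returned 4xx/5xx."""
    hits = Counter()
    errors = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip malformed lines and non-Googlebot traffic
        hits[match.group("path")] += 1
        if match.group("status")[0] in "45":
            errors[match.group("path")] += 1
    return hits, errors


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Made-up sample lines; a real run would read them from your server's log file.
sample = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:14:02:10 +0000] "GET /products HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:14:05:44 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT}"',
    '203.0.113.7 - - [10/Oct/2023:14:06:01 +0000] "GET /products HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits, errors = summarize_googlebot(sample)
print("Most crawled:", hits.most_common(3))
print("Error URLs:", dict(errors))
```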

3. Keep Your XML Sitemap and Robots.txt Updated

If your Log File shows that Google is spending too much time crawling pages you do not want appearing in the SERPs, you can request that Google’s crawlers skip these pages. This frees up some of your crawl budget for more important pages.

Your sitemap (which you can obtain from Google Search Console or Search Atlas) gives Googlebots a list of all the pages on your site that you want Google to index so they can appear in search results. Keeping your sitemap updated with all the web pages you want search engines to find and omitting those that you do not want them to find can maximize how webcrawlers spend their time on your site.

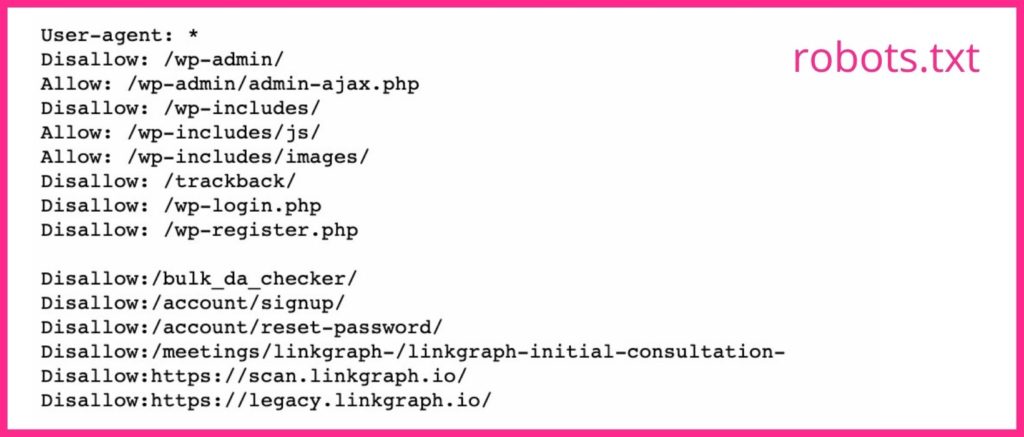

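Your robots.txt file tells search engine crawlers which pages you want and do not want them to crawl. If you have pages that don’t make good landing pages or pages that are gated, add a Disallow rule for their URLs in your robots.txt file. Googlebots will generally skip any URL that is disallowed. Note that noindex is not a robots.txt directive: it belongs in a page’s robots meta tag or X-Robots-Tag HTTP header, and Google has to be able to crawl the page in order to see it.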
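
A robots.txt file expresses its crawl rules with User-agent and Disallow lines. As a minimal sketch, Python’s standard `urllib.robotparser` module can check which paths a set of rules blocks before you publish them. The rules, the example.com domain, and the paths below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of the cart and the gated member area.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /members/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/crawl-budget", "/cart/checkout", "/members/profile"]:
    allowed = parser.can_fetch("Googlebot", "https://example.com" + path)
    print(path, "crawlable" if allowed else "blocked")
```

Python’s parser is a convenient sanity check, but it may not match Google’s own robots.txt handling in every edge case, so treat the result as a first pass.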

4. Reduce Redirects & Redirect Chains

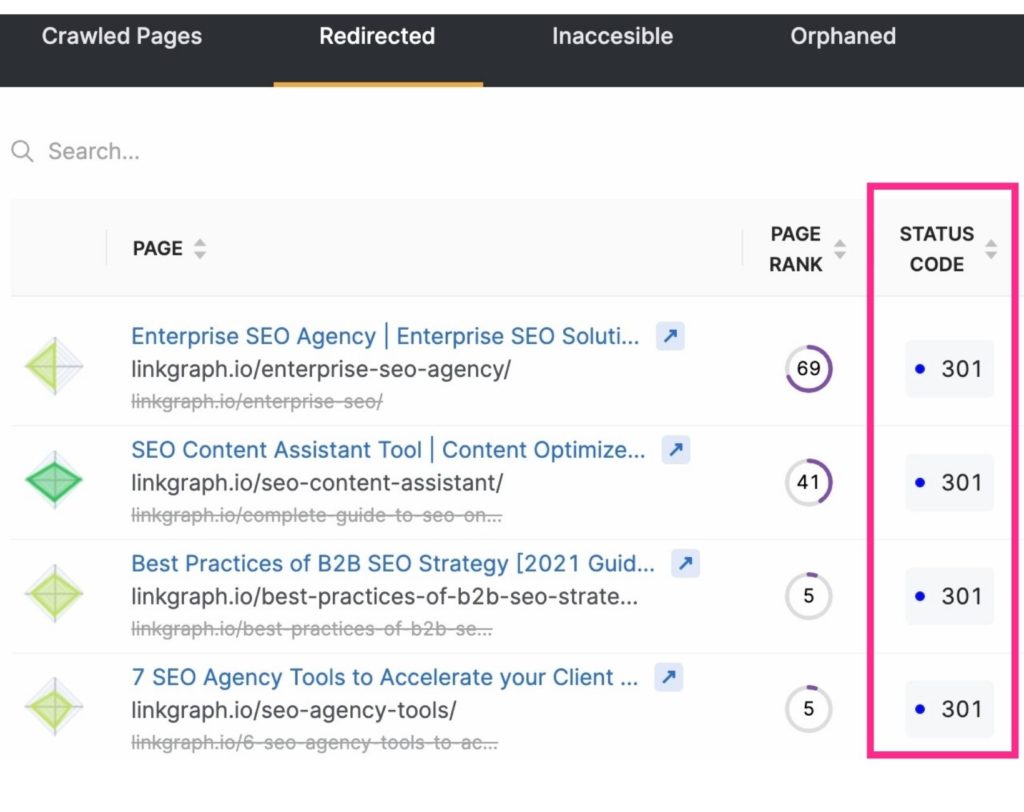

In addition to freeing up the crawl budget by excluding unnecessary pages from search engine crawls, you can also maximize crawls by reducing or eliminating redirects. These will be any URLs that result in a 3xx status code.

Redirected URLs take longer for a Googlebot to retrieve since the bot has to receive the redirect response and then request the new URL. While one redirect takes just a few milliseconds, they can add up, and this can make crawling your site take longer overall. This amount of time is multiplied when a Googlebot runs into a chain of URL redirects.

To reduce redirects and redirect chains, be mindful of your content creation strategy and carefully select the text for your slugs.
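
One way to picture the cleanup is to treat your redirects as a simple mapping and point every old URL straight at its final destination. The sketch below assumes you have exported your redirect rules into a Python dictionary; the paths and the helper names `resolve_chain` and `flatten` are hypothetical.

```python
def resolve_chain(url, redirects, max_hops=10):
    """Follow redirects starting at `url` and return every URL visited."""
    chain = [url]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain or len(chain) > max_hops:
            raise ValueError(f"redirect loop or overly long chain starting at {url}")
        chain.append(target)
    return chain


def flatten(redirects):
    """Point every redirecting URL directly at its final destination."""
    return {source: resolve_chain(source, redirects)[-1] for source in redirects}


# Hypothetical rules: /a takes three hops to reach /d.
redirects = {"/a": "/b", "/b": "/c", "/c": "/d", "/old": "/new"}

print(resolve_chain("/a", redirects))  # the full chain a crawler would follow
print(flatten(redirects))              # each source mapped to one final hop
```

After flattening, a crawler that requests `/a` needs one extra request instead of three.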

5. Fix Broken Links

Google often explores a site by navigating via your internal link structure. As it works its way through your pages, it will note if a link leads to a non-existent page (a 404 error). It will then move on, not wanting to waste time on said page.

The links to these pages need to be updated to send the user or Googlebot to a real page. Alternatively (while it’s hard to believe), the Googlebot may have misidentified a page as a 404 or other 4xx error when the page actually exists. When this happens, check that the URL doesn’t have any typos, then submit a crawl request for that URL through your Google Search Console account.

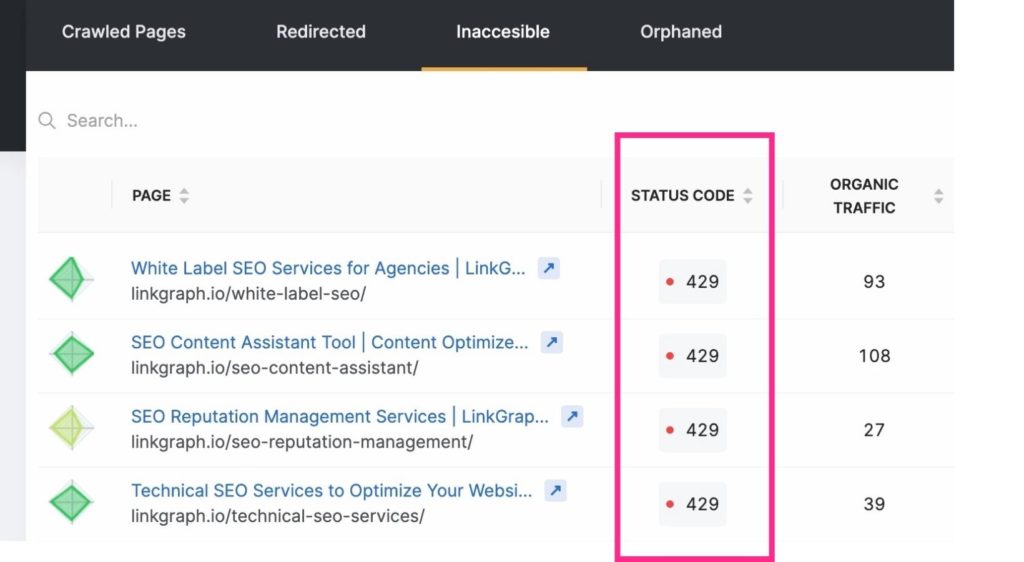

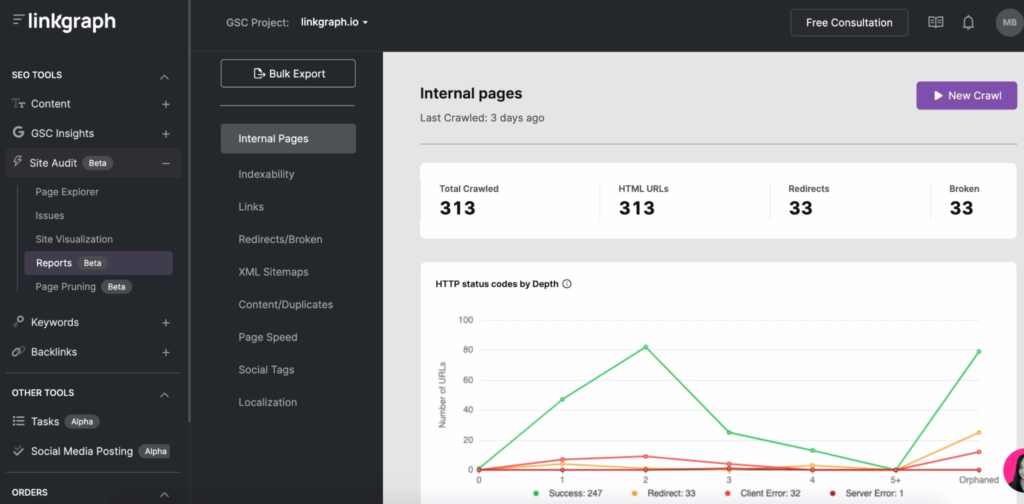

To stay current with these crawl errors, you can use your Google Search Console account’s Index > Coverage report. Or use Search Atlas’s Site Audit tool to find your site error report to pass along to your web developer.

Note: New URLs may not appear in your Log File Analysis right away. Give Google some time to find them before requesting a crawl.
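
If you already have a list of your internal links and the status code each target returns (for example, from a site audit export), finding the links to fix is a simple lookup. The sketch below assumes that data is available as two Python dictionaries; the structure, paths, and function name are hypothetical.

```python
def broken_links(internal_links, status_codes):
    """Return (source, target) pairs whose target responded with a 4xx status."""
    return [
        (source, target)
        for source, targets in internal_links.items()
        for target in targets
        # Targets with no recorded status default to 0 and are skipped.
        if 400 <= status_codes.get(target, 0) < 500
    ]


# Hypothetical data: which pages link where, and what each target returned.
internal_links = {"/": ["/pricing", "/old-offer"], "/blog": ["/old-offer"]}
status_codes = {"/pricing": 200, "/old-offer": 404}

for source, target in broken_links(internal_links, status_codes):
    print(f"{source} links to missing page {target}")
```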

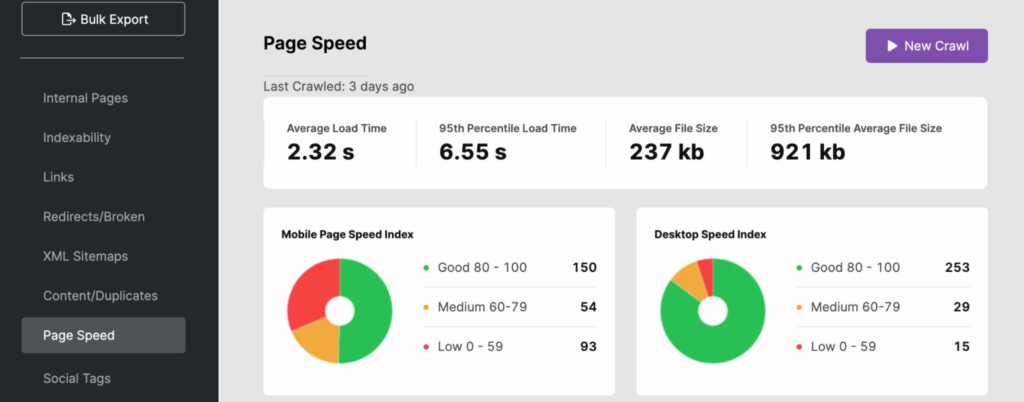

6. Work on Improving Page Load Speeds

Search engine bots can move through a site at a rapid pace. However, if your site speed isn’t up to par, it can take a major toll on your crawl budget. Use your Log File Analysis, Search Atlas, or PageSpeed Insights to determine if your site’s load time is negatively affecting your search visibility.

To improve your site’s response time, use dynamic URLs and follow Google’s Core Web Vitals best practices. This can include image optimization for media above the fold.

If the site speed issue is on the server-side, you may want to invest in other server resources such as:

- A dedicated server (especially for large sites)

- Upgrading to newer server hardware

- Increasing RAM

These improvements will also give your user experience a boost, which can help your site perform better in Google search since site speed is a ranking signal.

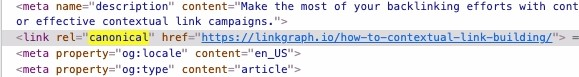

7. Don’t Forget to Use Canonical Tags

Duplicate content is frowned upon by Google, at least when you don’t acknowledge that the duplicate content has a source page. Why? Googlebot crawls every page it finds unless told to do otherwise. However, when it comes across a duplicate page or a copy of something it’s familiar with (on your site or off-site), it will stop crawling that page. And while this saves time, you should save the crawler even more time by using a canonical tag that identifies the canonical URL.

Canonicals tell the Googlebot to not bother using your crawl time period to index that content. This gives the search engine bot more time to examine your other pages.
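
A canonical tag is a `<link rel="canonical" href="...">` element in a page’s `<head>`. As a minimal sketch, the Python below uses the standard `html.parser` module to report which canonical URL a page declares, which is handy when auditing duplicate pages. The sample HTML and the example.com URL are made up for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical duplicate page that points back to its source page.
page = (
    '<html><head><link rel="canonical" href="https://example.com/shoes">'
    "</head><body>Red shoes</body></html>"
)
print(find_canonical(page))
```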

8. Focus on Your Internal Linking Structure

Having a well-structured linking practice within your site can increase the efficiency of a Google crawl. Internal links tell Google which pages on your site are the most important, and these links help the crawlers find pages more easily.

The best linking structures connect users and Googlebots to content throughout your website. Always use relevant anchor text and place your links naturally throughout your content.

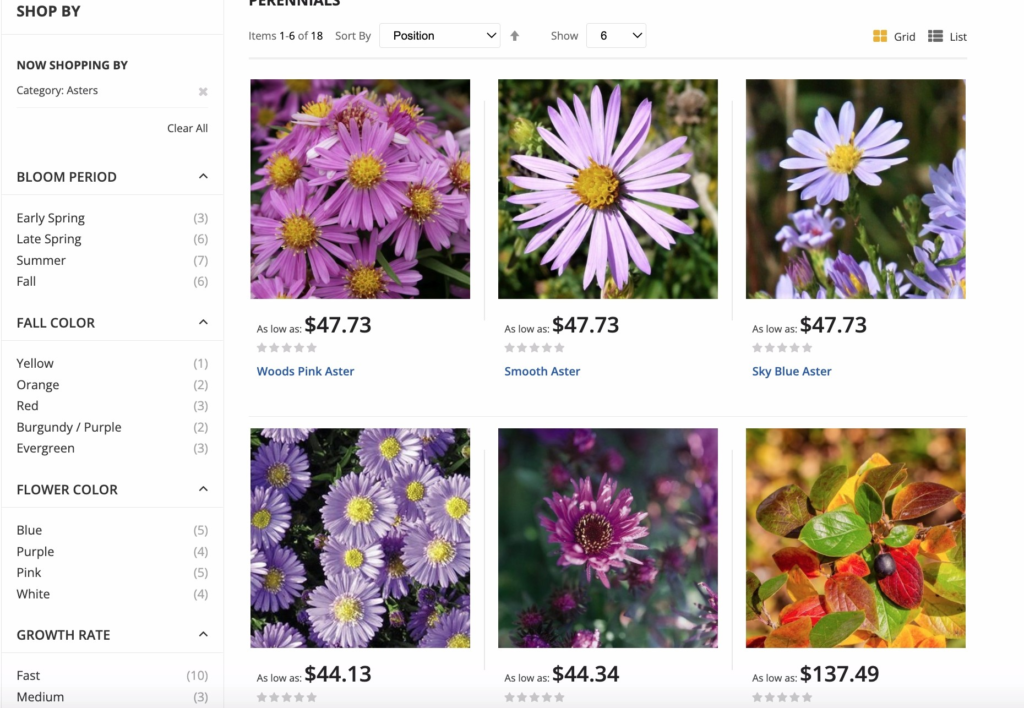

For e-commerce sites, Google has best practices for faceted navigation options to maximize crawls. Faceted navigation allows site users to filter products by attributes, making shopping a better experience. Following these practices helps avoid canonical confusion and duplicate issues in addition to excess URL crawls.
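
To see why faceted navigation can multiply the URLs a crawler encounters, it helps to strip the filter parameters and count how many URLs collapse into the same page. The sketch below is only an illustration, not Google’s method: the parameter names in `FACET_PARAMS` and the URLs are assumptions you would replace with your own.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical filter parameters that only re-sort or narrow the same listing.
FACET_PARAMS = {"color", "size", "sort"}


def strip_facets(url):
    """Return the URL with facet parameters removed and other parameters kept."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query) if key not in FACET_PARAMS]
    return urlunsplit(parts._replace(query=urlencode(kept)))


crawled = [
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?color=blue&size=9",
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes?page=2",
]

unique_pages = {strip_facets(url) for url in crawled}
print(f"{len(crawled)} crawled URLs map to {len(unique_pages)} distinct pages")
```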

9. Prune Unnecessary Content

Googlebots can only move so fast and index so many pages each time they crawl a site. If you have a high number of pages that do not receive traffic or have outdated or low-quality content, cut them! The pruning process lets you cut away the excess baggage that can weigh your site down.

Having excessive pages on your site can divert Googlebots onto unimportant pages while they ignore the pages that matter.

Just remember to redirect any links to these pages, so you don’t wind up with crawl errors.

10. Accrue More Backlinks

Just as Googlebots arrive on your site and then begin to index pages based on internal links, they also use external links in the indexing process. If other sites link to yours, Googlebot will travel over to your site and index pages in order to better understand the linked-from content.

Additionally, backlinks give your site a bit more popularity and recency, which Google uses to determine how often your site needs to be indexed.

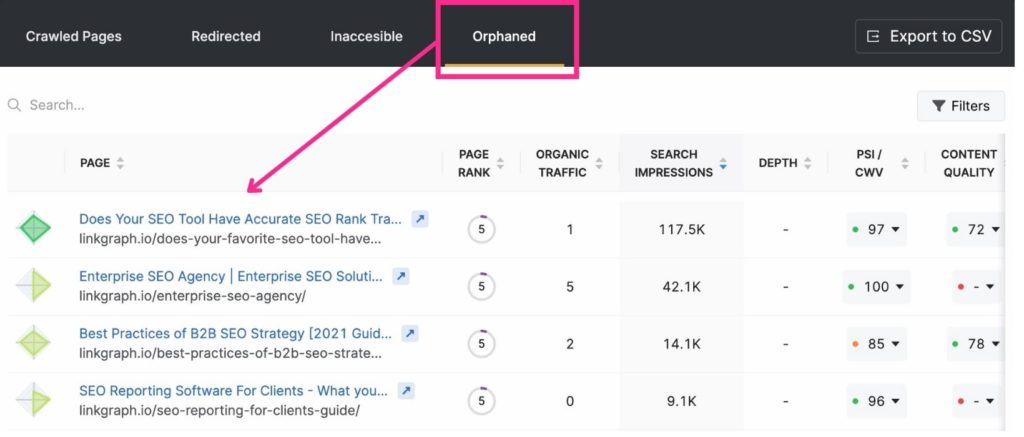

11. Eliminate Orphan Pages

Because Google’s crawler hops from page to page through internal links, it can find pages that are linked to effortlessly. However, pages that are not linked to somewhere on your site often go unnoticed by Google. These are referred to as “orphan pages.”

When is an orphan page appropriate? If it’s a landing page that has a very specific purpose or audience. For example, if you send out an email to golfers who live in Miami with a landing page that only applies to them, you may not want to link to the page from another.
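
To find orphan pages in the first place, you can compare the URLs in your sitemap against the URLs your own pages link to. The sketch below assumes you already have both as Python data (for example, from a site crawl export); the paths and the function name are hypothetical.

```python
def find_orphans(sitemap_urls, internal_links):
    """Return sitemap URLs that no other page on the site links to."""
    linked = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it reachable from elsewhere.
        linked.update(target for target in targets if target != source)
    return sorted(set(sitemap_urls) - linked)


# Hypothetical site: the Miami golf landing page is deliberately unlinked.
sitemap_urls = ["/", "/blog", "/blog/post-1", "/miami-golf-offer"]
internal_links = {
    "/": ["/blog"],
    "/blog": ["/", "/blog/post-1"],
    "/blog/post-1": ["/blog"],
}

print(find_orphans(sitemap_urls, internal_links))
```

Each URL in the result is either an intentional orphan, like the email landing page described above, or a page that needs an internal link.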

The Best Tools for Crawl Budget Optimization

Search Console and Google Analytics can come in quite handy when it comes to optimizing your crawl budget. Search Console allows you to request a crawler to index pages and track your crawl stats. Google Analytics helps you track your internal linking journey.

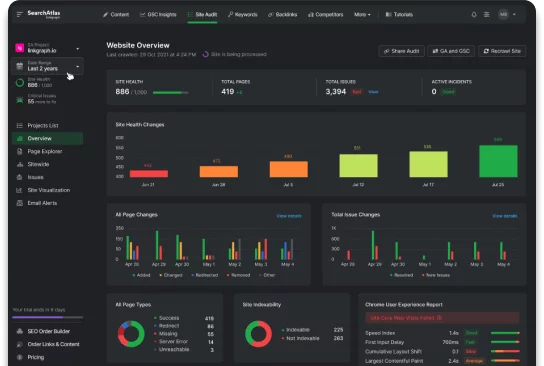

Other SEO tools, such as Search Atlas, allow you to find crawl issues easily through Site Audit tools. With one report, you can see your site’s:

- Indexability Crawl Report

- Index Depth

- Page speed

- Duplicate Content

- XML Sitemap

- Links

Optimize Your Crawl Budget & Become a Search Engine Top-Performer

While you cannot control how often search engines index your site or for how long, you can optimize your site to make the most of each of your search engine crawls. Begin with your server logs and take a closer look at your crawl report on Search Console. Then dive into fixing any crawl errors, your link structure, and page speed issues.

As you work through your GSC crawl activity, focus on the rest of your SEO strategy, including link building and adding quality content. Over time, you will find your landing pages climb the search engine results pages.